Leave GoDaddy and Go Completely Serverless Using Just Cloudflare and S3 Buckets: Part Two

This is the continuation of Part One. The goal, again, is:

In Part Two, I show you how to handle the .htaccess file, use Cloudflare Workers to serve dynamic content, create cron jobs, protect your S3 buckets, cache hard, handle comments, set up a WAF and firewall, and cover more advanced topics.

Results

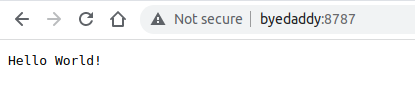

Here is my serverless WordPress website, now:

Table of Contents

Part Two

- Serverless: Serve a WordPress Website without a Server

- The Plan for Apache’s Htaccess

- Step 15. Set up a Cloudflare Worker Dev Environment

- Step 16. Serve Dynamic Content, Formerly PHP Scripts

- Step 17. Publish a Cloudflare Worker

- Step 18. Prevent Bots from Hammering your Workers

- Step 19. Cron Jobs without a Server

- Step 20. Move Comments and Schedules to a Third-Party

- Advanced Serverless Options

- Advanced Concepts and Gotchas

- Checklist: Move Sites from GoDaddy

- The GoDaddy Experience Simulator

- Results and Summary

- More Reasons to Leave GoDaddy

- GoDaddy is Indirectly Vulnerable to Hacks

- Many Domains Point to the GoDaddy Shared Server

- Motivation to Leave

- Let’s Get Organized

- High-Level Plan of Action

- Step 1. Calculate the Monthly Cost with AWS S3

- Step 2. Back Up Everything

- Step 3. Test the Cloudflare Email Routing Service

- Step 4. (Optional) Test a Third-Party Email Forwarder

- Step 5. Create a Virtual Machine Running Docker

- Step 6. Install Docker with Cloud-Init

- Step 7. Replicate GoDaddy’s WordPress Environment

- Step 8. Generate Static HTML Offline

- Step 9. Sync Static Production Files to S3

- Step 10. Verify the 404 Page Works

- Step 11. Allow Cross-Origin Resource Sharing (CORS)

- Step 12. Allow Subdomain Virtual Hosts with S3

- Step 13. Query Strings and S3 Bucket Keys

- Step 14. Enable HTTPS Websites with HTTP-Only S3

- Summary of AWS S3 Bucket CLI commands

Serverless: Serve a WordPress Website without a Server

Congratulations. At this point, we have a working website and do not even need Workers or Lambdas: HTML and images load fast, virtual subdomains work, the 404 page functions, and the whole site responds via HTTPS.

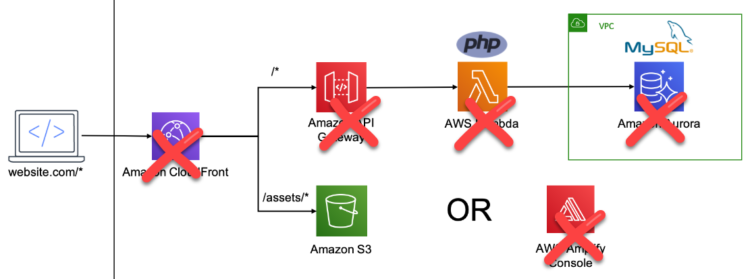

Some people throw a lot of services at serverless, overcomplicating serverless. But, why? Before you read an article that talks you into using Route 53, API Gateway, Lambdas, CloudFront, CloudWatch, RDS, and Terraform to hold it all together, stop and ask yourself what you need, not what is in vogue for a résumé.

My vision of serverless looks closer to this:

Where to start? Notice that in the production/ folder there is a remnant .htaccess file containing directives such as:

```apache
RewriteEngine On
RewriteBase /

# 403 Errors
ErrorDocument 403 "<html><head><title>Forbidden</title></head><body style=\"padding:40px\"><h1>Apologies</h1>I'm sorry, but this page is not allowed to be viewed.<br><br>Terribly sorry for the inconvenience. If you feel this is in error, please continue on to <strong>InnisfailApartments.com</strong> and accept my sincerest apologies.</body></html>"

# Error documents
ErrorDocument 404 /not-found/
ErrorDocument 410 "Gone."

# Block bad requests
RewriteCond %{QUERY_STRING} wp-config [NC,OR]
RewriteCond %{REQUEST_URI} wp-login [NC,OR]
RewriteCond %{REQUEST_URI} wp-admin [NC,OR]
RewriteCond %{REQUEST_URI} dl-skin [NC,OR]
RewriteCond %{REQUEST_URI} timthumb [NC]
RewriteRule .* - [F]

# Block bad bots
RewriteCond %{HTTP_USER_AGENT} Baiduspider [NC]
RewriteRule .* - [F]
...
```

There are more directives—different cache timings for various assets, a rewrite rule to redirect www to the root domain, rules to block bad bots, and so forth. These are handy Apache rules, but do we still need all of them?

My Identified Serverless Needs

Before following anyone’s “Set up Serverless in 10 Minutes” article, I need to think through what I actually need in a serverless setup to get away from GoDaddy without becoming a full-time SRE.

Do I need a gateway? Am I just trying to be cool by throwing every service at the problem? Am I fooling myself into thinking that if every AWS service touches my serverless site it will make me marketable?

The best solution is one that works and keeps working.

Here is what I have identified as my needs:

- Serve a custom 404 page.

- Honor the `accept-encoding: gzip, deflate, br` request.

- Achieve great SEO performance.

- Redirect moved pages over time.

- Serve vanity affiliate links.

- Serve tracking links in emails.

- Run cron jobs with custom PHP.

- Collect information with a 1×1 pixel in PHP.

- Mitigate bot scraping.

- Publish the site and forget it.

- Keep backups.

Some Htaccess Thought Experiments:

Now that I’ve listed my serverless requirements, we can brainstorm how to handle the .htaccess directives:

- If we simply delete the `.htaccess` file, then:

  - No rewrites at all.

  - No 401, 403, 301, 302, 500, etc. response codes, ever.

  - Only 200 and 404 response codes are natively supported by S3.

  - Deleted pages in the future return 404 instead of 410 (Gone).

  - It’s impossible to `301`-redirect pages; only duplicate content remains.

  - Cloudflare allows only three Page Rules, and one is already used for HTTP → HTTPS.

  - We will face major SEO issues in the future.

- If we run a thin Apache server, then:

  - We’ll need a host or an EC2 instance.

  - It’ll be slower than serving an `index.html` directly from S3.

  - It will make me sad; if I must use a server, it should be Nginx.

- If we use an AWS Gateway with an incoming Lambda, then:

  - Problem solved, but…

  - Each `GET` request incurs a cold-start Lambda delay (bad for SEO).

  - All images and assets are either piped through the Lambda or redirected with a 302 (bad for SEO).

  - I’d be tightly coupled to AWS and the fragile glue between its services.

  - I’d need to write a mini-server in Python or Node, hard-code and maintain rules, and then…

  - I will cry myself into a coma.

- If we serve static files from S3 and use the 50 allowed redirection rules, then:

  - 404 errors are easy to handle.

  - Maintaining a JSON file of URL redirects is manageable.

  - Still no status-code headers, just 302 responses.

  - S3 accepts only HTTP requests and drops HTTPS.

  - S3 doesn’t use `gzip` to return objects.

  - Metadata (Last-Modified, ETag) can be set, but only via standalone `PUT` requests.

  - Easy redirects can go in JSON, and 410-Gone rules can live in a Lambda, but…

  - I’d have to maintain redirect rules in both S3 and Lambda: two places, deeper coma.

- If we use Cloudflare Pages, then:

  - We’d be locked into another vendor’s configuration system.

  - You’d immediately notice that we’re not using Vue, Angular, React, Jekyll, or Hugo.

  - Those gigabytes of images and assets still need a home, and definitely not GitHub!

- If we use Cloudflare Workers, then:

  - We’re still vendor-locked.

  - The supported languages are vanilla JavaScript, TypeScript, and Rust.

  - It’s still coding a thin server, just in a simpler, Lambda-like Worker.

  - We get WAF, caching, redirects, TLS (HTTPS), logging, and more, for free.

The Plan for Apache’s Htaccess

So, what am I going to do with that core Apache file?

- Turn off any Hide My WordPress obfuscation plugins. With static HTML, who cares if anyone sees `/wp-uploads/` anymore? A bot can hammer the non-existent `/wp-admin/` all day long. Most of the rewrite rules will then elegantly vanish. These can all be removed:

```apache
# These are now obsolete
RewriteRule ^inc/(.*) /inc/$1?yCbHO_hide_my_wp=1234 [QSA,L]
RewriteRule ^files/(.*) /files/$1?yCbHO_hide_my_wp=1234 [QSA,L]
RewriteRule ^plugins/9a4bf777/(.*) /content/plugins/a3-lazy-load-filters/$1?yCbHO_hide_my_wp=1234 [QSA,L]
RewriteRule ^plugins/1ed56861/(.*) /content/plugins/a3-lazy-load/$1?yCbHO_hide_my_wp=1234 [QSA,L]
RewriteRule ^plugins/474e0579/(.*) /content/plugins/ank-google-map/$1?yCbHO_hide_my_wp=1234 [QSA,L]
...
```

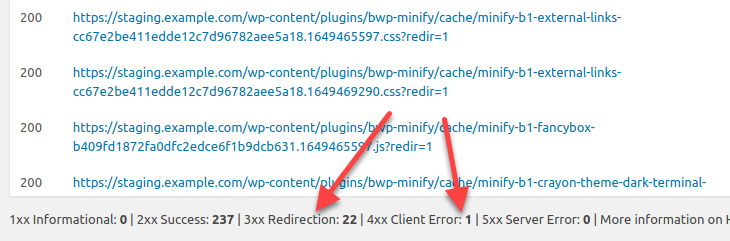

- Turn off local compression. Yes, you read that right. I have an abandoned plugin called BWP Minify that caches content in gzip-compressed files. There are too many ways compression can backfire, especially since S3 assets won’t have a compression header. By turning off compression for offline assets, we ensure HTML, CSS, and JS are stored and crawled correctly for static offline use.

- Remove all the wonderful attack-mitigation directives. For a static presentation site hosted on S3, I say go ahead: automated XSS injection? Bot farmers? Bring it on. These can all be removed:

```apache
# No outside frames, please
Header set X-Frame-Options SAMEORIGIN

# Prevent MIME-based attacks
Header set X-Content-Type-Options "nosniff"

# Enable XSS protection
Header set X-XSS-Protection "1; mode=block"
```

- Set edge cache expiration and browser cache settings in Cloudflare under the caching section, instead of using `.htaccess`. These can all be removed:

```apache
# CSS
ExpiresByType text/css "access plus 1 month"

# Javascript
ExpiresByType application/javascript "access plus 1 month"

# JSON
ExpiresByType application/json "access plus 30 minutes"

# Default directive
ExpiresDefault "access plus 1 month"
```

- Remove all the WordPress rules in `.htaccess`.

- Remove security rules in `.htaccess`. These can all be removed:

```apache
# 403 Errors
ErrorDocument 403 "<html><head><title>Forbidden</title></head><body style=\"padding:40px\"><h1>Apologies</h1>We're sorry, but this page is not allowed to be viewed.<br><br>Terribly sorry for the inconvenience. If you feel this is in error, please continue on to <strong>ericdraken.com</strong> and accept our sincerest apologies.</body></html>"

# Send 401 errors
ErrorDocument 401 "Permission denied."

# Require authentication to access staging
AuthUserFile "/home/ubuntu/.htpasswds/public_html/innisfailapartments_com/passwd"
AuthType Basic
AuthName "Staging Area"
require valid-user
```

- Remove all the `410`-gone redirects because “currently Google treats 410s (Gone) the same as 404s (Not found), so it’s immaterial to [Google] whether you return one or the other. (ref)”

What is left is dynamic redirects and important headers concerning affiliate links. Plus, I will sometimes give a custom URL in an email link (e.g. ericdraken.com/linkedin4/ -> ericdraken.com/) and can detect if the recipient has clicked on it. For this, there has to be some dynamic processing. The remainder of the `.htaccess` essentially looks like this:

```apache
# Affiliate links
RewriteRule ^/?out/some-affiliate/?(.*)$ "https://some-affiliate.net/c?id=136" [R=302,L,E=AFFLINK:1]

# Do not let robots index or follow /out/
Header always set X-Robots-Tag "noindex, nofollow" env=AFFLINK

<IfModule mod_rewrite.c>
RewriteEngine On
RewriteBase /
RewriteRule ^old-page/(.*) /new-page/$1 [QSA,L,R=301]
</IfModule>
```

For dynamic URL redirects, I will use S3 redirects and explore vanilla JS with Cloudflare Workers. See the next section.
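As a rough sketch of how those last few Apache rules might look in vanilla JS, the function below turns a request URL into a redirect decision. The names `resolveRedirect` and `toResponse`, and the shape of the returned object, are my own assumptions for illustration; this is not the production Worker.

```javascript
// Hypothetical helper: translate the remaining .htaccess rules into plain JS.
// Returns null when no rule matches, so the caller can let S3 serve the page.
function resolveRedirect(requestUrl) {
  const url = new URL(requestUrl);

  // RewriteRule ^/?out/some-affiliate/?(.*)$ ... [R=302,L,E=AFFLINK:1]
  if (url.pathname.startsWith("/out/some-affiliate")) {
    return {
      status: 302,
      location: "https://some-affiliate.net/c?id=136",
      // Header always set X-Robots-Tag "noindex, nofollow" env=AFFLINK
      headers: { "X-Robots-Tag": "noindex, nofollow" },
    };
  }

  // RewriteRule ^old-page/(.*) /new-page/$1 [QSA,L,R=301]
  const moved = url.pathname.match(/^\/old-page\/(.*)/);
  if (moved) {
    return {
      status: 301,
      // url.search carries the original query string along, like the QSA flag
      location: `${url.origin}/new-page/${moved[1]}${url.search}`,
      headers: {},
    };
  }

  return null;
}

// Inside a Worker, a decision could be turned into a real response like this
function toResponse(decision) {
  return new Response(null, {
    status: decision.status,
    headers: { Location: decision.location, ...decision.headers },
  });
}
```

Keeping the rule matching separate from the `Response` object means the rules can be tested offline without a Worker runtime.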

For static redirects, S3 redirects work, as well as using regular HTML redirects like so:

```html
<!DOCTYPE html>
<html>
<head>
  <title>Redirecting...</title>
  <noscript>
    <meta http-equiv="refresh" content="0;url=https://example.com/new-page/">
  </noscript>
  <script type="text/javascript">
    window.location = "https://example.com/new-page/";
  </script>
</head>
<body>
  <p>You are being redirected to
    <a href="https://example.com/new-page/">https://example.com/new-page/</a>
  </p>
</body>
</html>
```

Summary

For most static WordPress sites, you can just delete the .htaccess file. For typical URL redirecting needs, there are easy solutions like those above. We no longer need security rules, so those can go. If you have a lot of dynamic affiliate links or cloaked URLs, that is when you need S3 redirect rules, Worker JavaScript redirects, or paid Cloudflare redirects. For static redirects, you can effectively use HTML redirects.

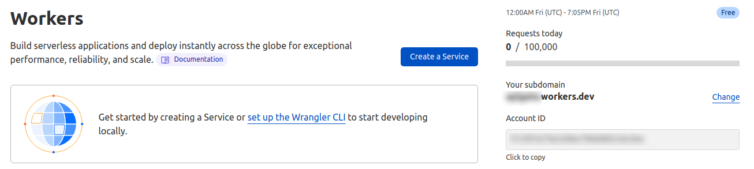

Step 15. Set up a Cloudflare Worker Dev Environment

Say I need something dynamic triggered by a web page. Cloudflare has a Lambda alternative called Workers that runs JavaScript or Rust and has no cold start (there are severe limitations, however).

This is a simpler solution than breaking out IAMs, Roles, Route53, cold-start Lambdas, monitoring, metrics, maybe Terraform, yadda-yadda because Cloudflare takes care of all of that in your account.

FYI, the Worker needs to be uploaded from the console, but the good news is that it can be kept under version control offline. An example of a vanilla JS worker is this:

```javascript
export default {
  async fetch(request) {
    return new Response("Hello World!");
  },
};
```

Install the Workers CLI

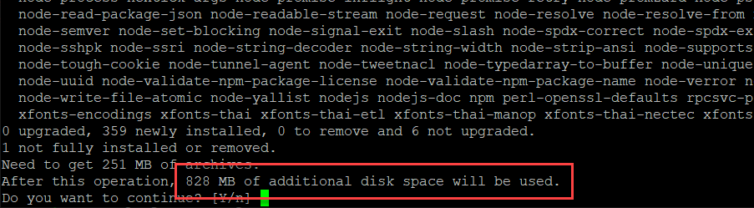

Cloudflare suggests installing Node.

828 MB just to install Node? Are you kidding?

Let’s use a Node Docker container with Wrangler (Cloudflare’s CLI tool) instead. I’ve created a Dockerfile and updated the docker-compose.yaml and .env files (I’ll refine these further later on):

```dockerfile
FROM node:17-alpine

ARG USER_UID=1000
ARG USER_GID=1001

USER root

# Trick to allow Wrangler to work without Chrome.
# Written on one line with printf: a continued line that starts with '#'
# is treated as a Dockerfile comment and would drop the shebang.
RUN printf '#!/bin/sh\nexit 0\n' > /usr/local/bin/google-chrome \
    && chmod +x /usr/local/bin/google-chrome

RUN deluser --remove-home node \
    && addgroup -S node -g ${USER_GID} \
    && adduser -S -G node -u ${USER_UID} node

USER node

# For Rust
ENV USER=node

WORKDIR /home/node

# As of 2022-04-02, @beta = v2.0
RUN echo '\
{\
    "dependencies": {\
        "wrangler": "beta"\
    }\
}\
' > /home/node/package.json

RUN npm --no-color install

ENV PATH="/home/node/node_modules/.bin:${PATH}"

ENTRYPOINT [ "/home/node/node_modules/.bin/wrangler" ]
```

```yaml
# docker-compose.yaml
version: "3.5"

x-common-variables: &common-variables
  VIRTUAL_HOST: ${VIRTUAL_HOST}
  WORDPRESS_DB_HOST: ${WORDPRESS_DB_HOST}
  WORDPRESS_DB_USER: ${WORDPRESS_DB_USER}
  WORDPRESS_DB_PASSWORD: ${WORDPRESS_DB_PASS}
  WORDPRESS_DB_NAME: ${WORDPRESS_DB_NAME}
  APACHE_LOG_DIR: "/var/www/logs"

services:
  wordpress:
    # The exact version used on GoDaddy
    image: wordpress:4.6.1-php5.6-apache
    container_name: ${COMPOSE_SITE_NAME}_wordpress
    volumes:
      # e.g. /home/ubuntu/godaddy/homedir/public_html/innisfailapartments_com/staging
      - ${HTDOCS_PATH}:/var/www/html:rw
      # e.g. /home/ubuntu/godaddy/homedir
      - ${HOMEDIR_PATH}:/home/${GODADDY_USER}:rw
      # e.g. /home/ubuntu/staging_innisfailapartments_com/logs
      - ${LOGS_PATH}:/var/www/logs:rw
      # You MUST create these files locally before first run
      - ${LOGS_PATH}/debug.log:/var/www/html/content/debug.log
      - ${LOGS_PATH}/apache_errors.log:/var/log/apache/error.log
      - ${HTDOCS_PATH}/../production:/var/www/production
      - ./php.ini:/usr/local/etc/php/php.ini
    networks:
      - default
    ports:
      - 80:80
    depends_on:
      - db
    environment:
      <<: *common-variables
    # && apt-get update && apt-get install -y libzip-dev zip && docker-php-ext-install zip
    # && a2dismod deflate -f
    # Set www-data to the same UID as the ByeDaddy ubuntu user; fix ServerName problem.
    # Each grep -q ... || echo ... appends its line only when it is missing.
    command: >-
      sh -c "/usr/sbin/groupmod -g 1001 www-data &&
      /usr/sbin/usermod -u 1000 www-data &&
      (grep -q ServerName /etc/apache2/apache2.conf || echo 'ServerName localhost' >> /etc/apache2/apache2.conf) &&
      (grep -q ${VIRTUAL_HOST} /etc/hosts || echo '127.0.0.1 ${VIRTUAL_HOST}' >> /etc/hosts) &&
      a2dismod deflate -f &&
      docker-entrypoint.sh apache2-foreground"
    restart: unless-stopped

  wpcli:
    image: wordpress:cli-php5.6
    container_name: ${COMPOSE_SITE_NAME}_wpcli
    volumes:
      # Path to WordPress wp-config.php
      - ${HTDOCS_PATH}:/app:rw
    working_dir: /app
    user: "${USER_UID}:${USER_GID}"
    networks:
      - default
    depends_on:
      - db
    environment:
      <<: *common-variables
    entrypoint: wp
    command: "--info"

  wrangler2:
    build:
      context: .
      dockerfile: cloudflare.Dockerfile
      args:
        USER_UID: "${USER_UID}"
        USER_GID: "${USER_GID}"
    image: wrangler2:latest
    container_name: ${COMPOSE_SITE_NAME}_wrangler2
    user: "${USER_UID}:${USER_GID}"
    volumes:
      - ${HTDOCS_PATH}/../workers:/home/node/workers
      - wrangler_files:/home/node/.wrangler
    environment:
      CLOUDFLARE_API_TOKEN: ${CLOUDFLARE_API_TOKEN}
    networks:
      - default
    ports:
      - "8787:8787"
    working_dir: /home/node/workers
    entrypoint: sh
    #command: "whoami"

  db:
    # The exact version used on GoDaddy
    image: mysql:5.6
    container_name: ${COMPOSE_SITE_NAME}_mysql
    volumes:
      - db_files:/var/lib/mysql
      # Load the initial SQL dump into the DB when it is created
      # /home/ubuntu/godaddy/sql/innisfailapartments_wp.sql
      - ${SQL_DUMP_FILE}:/docker-entrypoint-initdb.d/dump.sql
    networks:
      - default
    environment:
      MYSQL_ROOT_PASSWORD: ${WORDPRESS_DB_PASS}
      MYSQL_USER: ${WORDPRESS_DB_USER}
      MYSQL_PASSWORD: ${WORDPRESS_DB_PASS}
      MYSQL_DATABASE: ${WORDPRESS_DB_NAME}
    restart: unless-stopped

volumes:
  # Persist the DB data between restarts
  db_files:
  wrangler_files:

networks:
  default:
    name: ${COMPOSE_SITE_NAME}_net
```

```
# .env
USER_UID=1000
USER_GID=1001
VIRTUAL_HOST=staging.innisfailapartments.com
COMPOSE_SITE_NAME=staging_innisfailapartments_com

# Taken from wp-config.php
WORDPRESS_DB_HOST=db
WORDPRESS_DB_USER=innisfail
WORDPRESS_DB_PASS=xxxxx
WORDPRESS_DB_NAME=innisfailapartments_wp

GODADDY_USER=xxxx
HOMEDIR_PATH=/home/ubuntu/godaddy/homedir
HTDOCS_PATH=/home/ubuntu/godaddy/homedir/public_html/innisfailapartments_com/staging
LOGS_PATH=/home/ubuntu/staging_innisfailapartments_com/logs
SQL_DUMP_FILE=/home/ubuntu/godaddy/mysql/innisfailapartments_wp.sql

CLOUDFLARE_API_TOKEN=xxxxxx
```

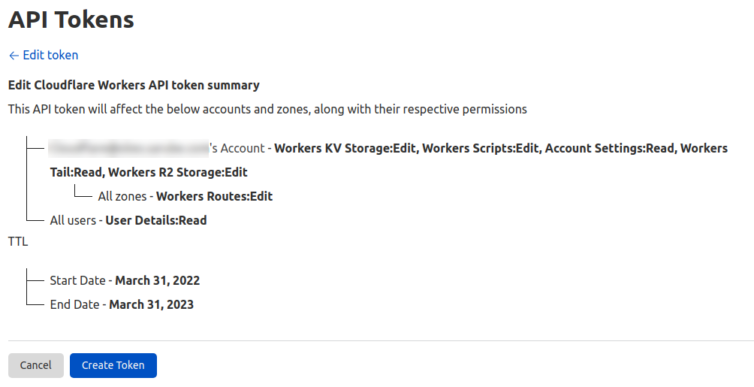

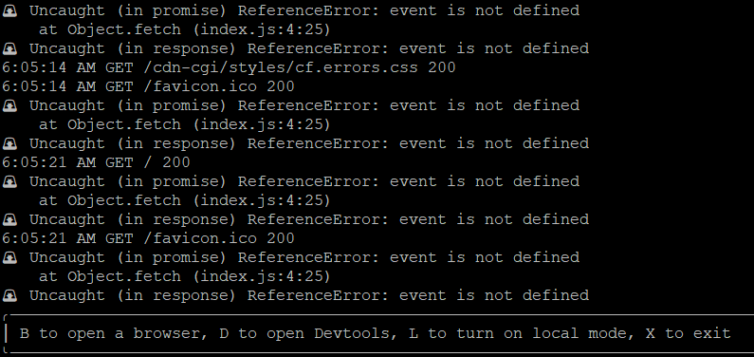

Why is this so cool? Now I can run docker-compose run --rm wrangler2 and communicate with Cloudflare Workers from the VM. I can also test my Workers offline in dev mode. Awesome. First, I’ll need to create a Workers token in the Cloudflare dashboard.

The token goes into the `.env` file as `CLOUDFLARE_API_TOKEN`, which is passed as an environment variable to the Docker container.

I’ve created a sample Worker and am running it locally:

```
~/workers $ cd innisfail/
~/workers/innisfail $ ls
CODE_OF_CONDUCT.md  LICENSE_MIT  index.js      wrangler.toml
LICENSE_APACHE      README.md    package.json
~/workers/innisfail $ wrangler dev --ip ::
Listening on http://:::8787
```

Use `wrangler dev --ip ::` to bind to all IPs, instead of using 0.0.0.0 or 127.0.0.1.

Now we can have fun exploring Workers.

```javascript
export default {
  async fetch(request) {
    return new Response(JSON.stringify({
      'headers': Object.fromEntries(request.headers),
      'body': request.body,
      'redirect': request.redirect,
      'method': request.method
    }, null, 2));
  },
};
```

With rich request information and a map function, we can recreate those .htaccess rules.

```
# byedaddy:8787 response
{
  "headers": {
    "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",
    "accept-encoding": "gzip",
    "accept-language": "en-US,en;q=0.9",
    "cache-control": "max-age=0",
    "cf-connecting-ip": "61.97.46.18",
    "cf-ew-preview-server": "https://69m73.cfops.net",
    "cf-ipcountry": "CA",
    "cf-ray": "6f5fc93ebd6a8417",
    "cf-visitor": "{\"scheme\":\"https\"}",
    "connection": "Keep-Alive",
    "host": "innisfail2.xxxxx.workers.dev",
    "sec-gpc": "1",
    "upgrade-insecure-requests": "1",
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.51 Safari/537.36",
    "x-forwarded-proto": "https",
    "x-real-ip": "60.18.156.198"
  },
  "body": null,
  "redirect": "manual",
  "method": "GET"
}
```
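To make the idea of recreating `.htaccess` rules concrete, here is a minimal sketch of the "Block bad requests" and "Block bad bots" directives as a table of rules checked against the request. The names `blockRules` and `isBlocked` are assumptions of mine, and the table only covers the directives quoted earlier in this article.

```javascript
// Hypothetical rule table mirroring the RewriteCond lines in the .htaccess file.
// Each rule names the part of the request to inspect and a
// case-insensitive pattern (the [NC] flag in Apache).
const blockRules = [
  { part: "query", pattern: /wp-config/i },
  { part: "path", pattern: /wp-login/i },
  { part: "path", pattern: /wp-admin/i },
  { part: "path", pattern: /dl-skin/i },
  { part: "path", pattern: /timthumb/i },
  { part: "userAgent", pattern: /Baiduspider/i },
];

// True when any rule matches, like RewriteCond lines chained with [OR]
function isBlocked(requestUrl, userAgent = "") {
  const url = new URL(requestUrl);
  const parts = { query: url.search, path: url.pathname, userAgent };
  return blockRules.some((rule) => rule.pattern.test(parts[rule.part]));
}
```

In a Worker, this could be called as `isBlocked(request.url, request.headers.get("user-agent") || "")`, returning a 403 response when it is true, which is what the `[F]` flag does in Apache.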

Step 16. Serve Dynamic Content, Formerly PHP Scripts

I have a tracking URL that looks like this:

I created a tracking pixel many years ago, and I’m not even sure it does anything anymore. But it’s supposed to execute a PHP script:

```php
<?php
/**
 * Eric Draken
 * Date: 2016-07-23
 * Time: 11:31 PM
 * Desc: Tracking pixel to send visitor information to Twitter
 *
 * Updates:
 * 2016-08-04 - Added re parameter, relaxed referrer policy
 * 2016-09-09 - Add a last-modified tag
 */

// Referrer must be from the same domain
if( isset($_GET['debug']) || stripos($_SERVER['HTTP_REFERER'], 'innisfailapartments.com') > 0 )
{
    // Get the real IP
    if (isset($_SERVER["HTTP_CF_CONNECTING_IP"])) {
        $_SERVER['REMOTE_ADDR'] = $_SERVER["HTTP_CF_CONNECTING_IP"];
    }

    ...

    // Send back a transparent pixel
    header('Last-Modified: ' . gmdate('D, d M Y H:i:s') . ' GMT');
    header('Content-Type: image/gif');
    echo base64_decode('R0lGODlhAQABAJAAAP8AAAAAACH5BAUQAAAALAAAAAABAAEAAAICBAEAOw==');
```

Okay, so it sends visitor logs to Twitter (for some reason). Back then, Slack wasn’t mainstream, and I was overseas. I think I casually wanted to gauge visitor interest in that site.

Additionally, the active WordPress theme renders HTML based on the User-Agent header (e.g. iPhone, Edge, Chrome, etc.). For example:

```php
<?php if ( isset( $_SERVER['HTTP_USER_AGENT'] ) && ( false !== strpos( $_SERVER['HTTP_USER_AGENT'], 'MSIE' ) ) ) : ?>
<meta http-equiv="X-UA-Compatible" content="IE=edge,chrome=1" />
<?php endif; ?>
```

How would I run PHP in a serverless setup?

Some ideas:

- Rewrite the PHP into TypeScript to run in a Worker.

  - Easiest to implement and test locally. Cloudflare provides a transpiler.

- Host a tiny PHP-FastCGI server on Vultr, DigitalOcean, Linode, etc., just to execute PHP.

  - Too much overhead, but might be necessary in rare cases.

- Run the PHP script in AWS Lambda, which supports PHP.

  - A rewrite of the PHP code will still be needed, and possibly more.

  - We’d then have both a Lambda and a Worker.

- Use AWS or Cloudflare metrics and decommission the script.

  - Easiest to implement: just use Cloudflare metrics and/or GTM metrics.

- Remove theme elements that inspect headers and switch to responsive CSS.

  - A good idea to implement regardless.

Fortunately, I can drop the tracking pixel and scrape detection for this simple website. I found it easy to rewrite some PHP scripts into JavaScript.
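For a feel of what such a rewrite involves, here is a rough sketch of the tracking pixel in vanilla JS. The function names are my own, the elided middle of the PHP script is left out, and the 403 for a failed referrer check is an assumption, since the PHP excerpt does not show what happens in that case.

```javascript
// The same transparent 1x1 GIF that the PHP script echoes, kept as base64
const PIXEL_BASE64 =
  "R0lGODlhAQABAJAAAP8AAAAAACH5BAUQAAAALAAAAAABAAEAAAICBAEAOw==";

// Decode base64 into raw bytes (atob is available in Workers and modern Node)
function decodePixel(base64 = PIXEL_BASE64) {
  return Uint8Array.from(atob(base64), (ch) => ch.charCodeAt(0));
}

// Mirrors the PHP check: allow ?debug, or a Referer that mentions the site
function isAllowed(requestUrl, referer, host = "innisfailapartments.com") {
  const url = new URL(requestUrl);
  return url.searchParams.has("debug") ||
    (referer || "").toLowerCase().includes(host);
}

// Hypothetical Worker handler for a /pixel/ route
async function handlePixel(request) {
  if (!isAllowed(request.url, request.headers.get("referer"))) {
    // Assumption: the PHP excerpt does not show this branch
    return new Response(null, { status: 403 });
  }
  // The visitor IP, if needed, is in request.headers.get("cf-connecting-ip")
  return new Response(decodePixel(), {
    headers: {
      "Content-Type": "image/gif",
      "Last-Modified": new Date().toUTCString(),
    },
  });
}
```

The PHP superglobals map fairly directly onto `request.headers` and `URL.searchParams`, which is most of why the port is painless.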

Step 17. Publish a Cloudflare Worker

When the free plan’s limit of 100,000 Worker requests per day is reached, an error will be returned to the web browser. The good news: you can define multiple routes for a Worker. For example,

https://innisfailapartments.com/pixel/*

can be a route. Let S3 serve 404s and real pages, and invoke the Worker only when needed. Here’s a production Worker that doesn’t do much—except exist:

```javascript
/**
 * Innisfailapartments.com Worker
 * Eric Draken, 2022
 */
export default {
  async fetch(request) {

    // TODO: Use a secret or a key-value in Cloudflare
    const host = "innisfailapartments.com";

    const notFound = async () => {
      return Response.redirect(`https://${host}/not-found/`, 302);
    };

    // Convenience function
    const pathBeginsWith = (url, pathStart) => {
      if (typeof url !== 'object')
        throw new Error("Not a URL");
      if (typeof pathStart !== 'string')
        throw new Error("Not a string");
      return url.pathname.startsWith(pathStart);
    };

    try {
      const url = new URL(request.url.toLowerCase());
      const ua = request.headers.get("user-agent") || "";

      // Debugging
      // console.log(`UA: ${ua}`);
      // console.log(`Country: ${request.cf.country}`);
      // console.log(`Host: ${request.headers.get("host")}`);

      /*** Always have a trailing slash ***/
      if (!url.pathname.endsWith("/") && !url.pathname.includes(".")) {
        url.pathname = url.pathname + "/";
        return Response.redirect(url.toString(), 302);
      }

      /*** Block bad bots ***/
      // String.prototype.match() takes one argument, so the
      // case-insensitive flag has to live in the regex literal
      if (/baiduspider/i.test(ua))
        return notFound();

      /*** Rewrites ***/
      switch (request.method.toUpperCase()) {
        case "GET":
        case "HEAD":
          // Sample of redirects
          if (pathBeginsWith(url, '/go-home/')) {
            url.pathname = "/";
            url.host = host;
            return Response.redirect(url.toString(), 302);
          }
          // No redirect matched: fall through to the default below

        // By default, every method is 404d to confuse bots and scrapers
        default: {
          return notFound();
        }
      }
    } catch (e) {
      console.error(e);
      return new Response(`Server trouble: ${e.message}\n`, {
        status: 500,
        statusText: "Internal Server Error"
      });
    }
  },
};
```

Given the following wrangler.toml:

```toml
name = "innisfail"
main = "src/index.js"
compatibility_date = "2022-04-02"
account_id = "xxxxx"
workers_dev = true

# Run wrangler publish
[env.production]
# zone_id = "DEPRECATED"
route = "workers.innisfailapartments.com/*"
```

Publishing is as simple as:

```
~/workers $ wrangler publish --env production
 ⛅️ wrangler 0.0.24
--------------------
Uploaded innisfail-production (0.81 sec)
Published innisfail-production (3.27 sec)
  innisfail-production.xxxx.workers.dev
  workers.innisfailapartments.com/*
~/workers $
```

Additional Workers Topics

Please explore these concepts if you are excited to use Workers for your dynamic needs.

- Durable Objects (paid feature)

- Key-Value Storage (free, 25MB limit, eventual consistency)

- HTMLRewriter (because DOMParser is unavailable)

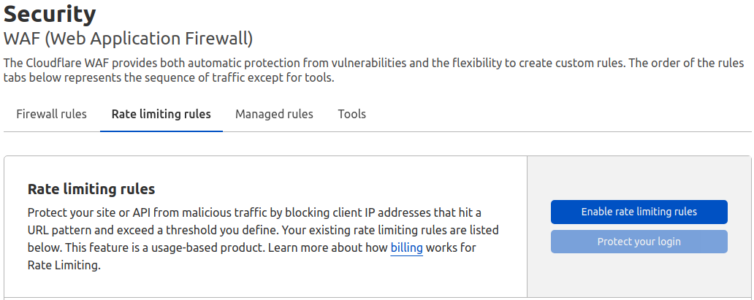

Step 18. Prevent Bots from Hammering Your Workers

Someone with an ax to grind might come along, write a multi-threaded URL blaster, and hammer your Worker until you hit the daily limit in under 2 minutes. Suppose they really dislike you and set a cron job to run every day at 12:01. You’re hooped. Why do I even come up with these scenarios? I have experience with AdWords click fraud.

Mitigation: WAF

You could enable WAF rate limiting, but it costs about a nickel per 10,000 legitimate requests—this can add up quickly.

Mitigation: Short Cache

You could add a Page Rule to cache Worker responses for 30 seconds and ignore query strings for caching. Combine that with a Transform Rule to strip any no-cache headers from clients. This works, but it’s advanced and has trade-offs. It works because a bad actor might spin up 16 threads for I/O to your Worker, but only one thread actually triggers execution. The downside: the Worker might cache things like GeoIP data if you’re not careful.

Mitigation: Polymorphic Worker Endpoints

If you’re a Champion of Cheap—or just enjoy mental gymnastics—you can do something clever for free: Have a Worker create a (cache) object in S3 (not Cloudflare), and configure an S3 Policy to redirect to the Worker only if that object doesn’t exist. After a few seconds, a subsequent Worker request can delete and regenerate that object for caching again. S3 handles the load, and you can cache for as little as 5 seconds.

Want to get cheeky? Have a Worker polymorphically change its endpoint via the Cloudflare API (and update S3 too) to keep the b@stards from hitting the same Worker URL directly.

Or do nothing and be kind to everyone. Or just pay Cloudflare. Your call.

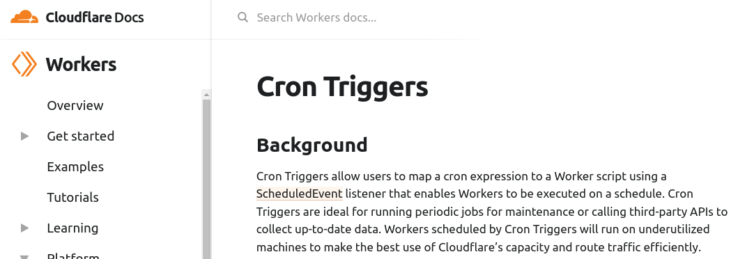

Step 19. Cron Jobs without a Server

How can you schedule cron jobs in this simple serverless design? Cloudflare is awesome—and once again comes to the rescue:

It’s straightforward to create a cron job that periodically invokes a Worker. That Worker can do anything—from rotating logs in S3 to fetching the latest Cloudflare CIDR blocks, to running batch GeoIP lookups on visitor IPs and generating neat graphs. The sky’s the limit.
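As a minimal sketch of what that looks like in code: a Worker reacts to a Cron Trigger through a `scheduled()` handler, and the schedule itself is declared in `wrangler.toml`. The log-rotation job, the helper names `archiveKeyFor` and `rotateLogs`, and the 03:00 schedule are all hypothetical and only here for illustration.

```javascript
// Hypothetical helper: name the S3 key that a nightly log rotation would write
function archiveKeyFor(scheduledTime) {
  const day = new Date(scheduledTime).toISOString().slice(0, 10); // YYYY-MM-DD in UTC
  return `logs/${day}.log`;
}

// Placeholder for the real work (assumption: the actual job would talk to S3)
async function rotateLogs(key) {
  console.log(`Would rotate logs into ${key}`);
}

// The schedule lives in wrangler.toml, for example:
//   [triggers]
//   crons = ["0 3 * * *"]
const worker = {
  async scheduled(event, env, ctx) {
    // event.scheduledTime is the trigger time in milliseconds since the epoch
    const key = archiveKeyFor(event.scheduledTime);
    // waitUntil() keeps the Worker alive until the async work settles
    ctx.waitUntil(rotateLogs(key));
  },
};

// In a real Worker module, this object would be the default export:
//   export default worker;
```

The same Worker can also keep its `fetch()` handler, so one deployment can serve both routes and scheduled jobs.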

To leave GoDaddy, I don’t actually need a cron job here.

Step 20. Move Comments and Schedules to a Third Party

If you allow comments on your WordPress site: stop.

There are too many common XSS vulnerabilities where a bad actor leaves a malicious message like:

I love your site! This is so helpful. A+ <img src=”data-uri:base64 1×1-pixel” onload=”alert(‘Add backdoor user.’)”></a>

As an admin, you check the comments “held for moderation,” but as soon as you do, it’s too late. While logged in as an admin, you’ve just triggered JavaScript:

```html
I love your site! This is so helpful. A+
<img src="data-uri:base64 1x1-pixel" onload="alert('Add backdoor user.')"></a>
```

You’d never know. The hacker—or a bot—can modify your WordPress site with full admin privileges. Trust me.

When you move to S3, you’ll no longer have comments or interactive WordPress scheduling. I suggest using something like Disqus and JaneApp to retain interactivity. For my needs, I prefer no comments at all.

Advanced Serverless Options

Honestly, I just need to get away from GoDaddy. Here are some impressive features that Cloudflare offers—for free. Feel free to let your imagination run wild with the possibilities:

- Serve compressed HTML and assets.

- Enable HTTP/3 with QUIC for better speed.

- Use TLS stapling for faster TLS handshakes.

- Send Early Hints for additional resources on a page.

- Inject GTM and scripts via Cloudflare.

- Create custom firewall rules.

- Set up a scrape shield.

- Block bots from visiting.

- Enable DDoS mitigation (save money on S3 and Workers).

- Dynamically rewrite parts of HTML using Workers.

Cloudflare rocks. Brotli compression rocks too.

Advanced Concepts and Gotchas

SEO Juice

Do you want Google to give SEO juice to example.com.s3-...-amazonaws.com or example.com? Add this to your website on each page:

```html
<link rel="canonical" href="https://example.com/[current page]" />
```

Protect S3

Want to prevent users from visiting example.com.s3-...-amazonaws.com?

Try a combination of these handy header scripts on every page (in a header.php). Combine them or mix and match. I use them all.

```html
<script type="text/javascript">
if(self.location !== top.location){ top.location.href = self.location.href; }
</script>

<script type="text/javascript">
if(document.location.href.indexOf(".amazonaws.com") > 0) {
    top.location.href='<?=$_SERVER["REQUEST_SCHEME"]."://".$_SERVER["SERVER_NAME"]?>';
}
</script>

<script type="text/javascript">
if(document.location.href.indexOf('<?=$_SERVER["REQUEST_SCHEME"]."://".$_SERVER["SERVER_NAME"]?>') !== 0) {
    top.location.href='<?=$_SERVER["REQUEST_SCHEME"]."://".$_SERVER["SERVER_NAME"]?>';
}
</script>

<script type="text/javascript">
if(document.location.href.indexOf("/index.html") > 0) {
    top.location.href='<?=$_SERVER["REQUEST_SCHEME"]."://".$_SERVER["SERVER_NAME"]?>';
}
</script>
```
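Those snippets rely on PHP to bake the site address into each page when the static HTML is generated. As a rough sketch, the same address checks could be folded into one function that is easy to test offline. The names `canonicalTarget` and `enforceCanonical` are hypothetical, the frame-busting check is left out, and the origin is passed in rather than generated by PHP.

```javascript
// Hypothetical consolidation of the address checks into one testable function.
// Returns the URL to send the visitor to, or null when the page is fine.
function canonicalTarget(href, siteOrigin) {
  // Anything not served from the real origin (e.g. *.amazonaws.com) goes home
  if (href !== siteOrigin && !href.startsWith(siteOrigin + "/")) {
    return siteOrigin;
  }
  // Do not expose the raw index.html object key
  if (href.includes("/index.html")) {
    return siteOrigin;
  }
  return null;
}

// In the browser, this could run on every page:
//   enforceCanonical(window, "https://example.com");
function enforceCanonical(win, siteOrigin) {
  const target = canonicalTarget(win.location.href, siteOrigin);
  if (target) win.top.location.href = target;
}
```

Comparing against the origin plus a trailing slash is slightly stricter than the `indexOf(...) !== 0` check above, since it also rejects look-alike hosts that merely begin with the site name.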

Rewrite HTML

Want to edit server-side HTML on the fly with Cloudflare’s HTMLRewriter? You can do some pretty cool server-side rewrites and the client would never know.

```javascript
...
return new HTMLRewriter()
  .on('div.template', new TemplateHandler())
  .on('div.successbuttons', new ButtonHandler())
  .on('span.copywrite-year', new CopywriteHandler())
  .transform(response);
```

However, there’s a major gotcha: you only get 10 ms and limited memory to run a Worker. So forget using DOMParser or complex regex if your goal is to modify HTML templates on the fly. You’ll need to get creative if you want to serve dynamically populated HTML without relying on client-side JavaScript (since SEO strongly favors server-side-rendered content).
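For reference, a handler passed to `.on()` is just an object with an `element()` method that HTMLRewriter calls for each matching element as the HTML streams through. Here is a minimal sketch of what a year-stamping handler might look like; the class body is my assumption of one possible implementation, not the handler from my production Worker.

```javascript
// Hypothetical element handler for the 'span.copywrite-year' selector.
// HTMLRewriter calls element() once per matching element.
class CopywriteHandler {
  constructor(year = new Date().getUTCFullYear()) {
    this.year = year;
  }

  element(element) {
    // Replace the span's contents with the year; content is treated
    // as plain text unless { html: true } is passed
    element.setInnerContent(String(this.year));
  }
}
```

Because the handler only touches the elements it is given, it stays cheap: no DOM tree is built, which is what keeps this approach inside the tight CPU budget.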

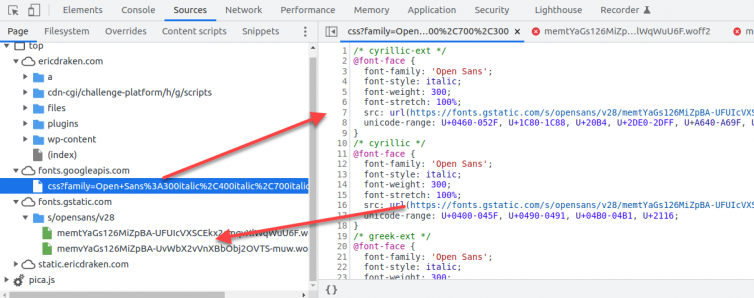

Have fun with HTMLRewriter. It makes a great interview question.

Serve Third-Party Fonts ‘Locally’

Let’s serve a cached copy of Google Fonts instead of pulling them from their CDN. Why? Every external domain requires a DNS lookup and a TLS handshake, both of which slow down asset loading—and may delay the site from painting. In DevTools, it’s easy to find the CSS and WOFF files and “host” them yourself.

Checklist: Move Sites from GoDaddy

I operate dozens of sites doing all kinds of things, but I really want to get this site off GoDaddy. Let’s review and automate the steps to break up with GoDaddy.

- Create or reuse the ByeDaddy VM.

- Convenience symlink:

ln -s ~/godaddy/homedir/public_html/example_com ~/example. - Confirm a ‘staging’ folder for the site exists.

- Create a `docker/` folder; copy the `docker-compose.yaml`, `.env`, `Dockerfile`, and `php.ini`.

- Update the `.env` file with the `wp-config.php` values.

- Create the `logs/` folder; add `debug.log` and `apache_errors.log`.

- Copy the `aws/` folder with the policy and CORS JSON files.

- Update the JSON files with the new site domain name.

- Run `aws configure` if this is the first time.

- Create the two S3 buckets: `example.com` and `static.example.com`.

  ```bash
  aws s3 mb s3://example.com --region us-west-2
  aws s3 mb s3://static.example.com --region us-west-2
  ```

- Change the buckets to static websites.

  ```bash
  aws s3 website s3://example.com/ --index-document index.html --error-document not-found/index.html
  aws s3 website s3://static.example.com/ --index-document index.html --error-document not-found/index.html
  ```

- Set the public policy of the buckets.

  ```bash
  aws s3api put-bucket-policy --bucket example.com --policy file://policy.json
  aws s3api put-bucket-policy --bucket static.example.com --policy file://static.policy.json
  ```

- Allow CORS for the buckets.

  ```bash
  aws s3api put-bucket-cors --bucket example.com --cors-configuration file://cors.json
  aws s3api put-bucket-cors --bucket static.example.com --cors-configuration file://cors.json
  ```

- Stop any other local sites: `docker-compose down` or `Ctrl+C`.

- Block commercial plugins from phoning home:

  ```php
  # wp-config.php
  define('WP_HTTP_BLOCK_EXTERNAL', true);
  define(
    'WP_ACCESSIBLE_HOSTS',
    implode(',', ['*.wordpress.org'])
  );
  ```

- Start the WordPress staging site: `docker-compose up`; confirm the SQL import succeeded.

- Edit `/etc/hosts`; add `127.0.0.1 example.com` and `127.0.0.1 static.example.com`.

- Visit `https://example.com/wp-admin/`; verify the site looks correct.

- (Optional) Keep yourself logged in longer for local WordPress dev work.

  ```php
  # Add this to the theme functions.php
  add_filter( 'auth_cookie_expiration', 'keep_me_logged_in_for_1_year' );
  function keep_me_logged_in_for_1_year( $expirein ) {
    return 365 * 86400; // 1 year in seconds
  }
  ```

- Edit `.htaccess`; remove any `.htpasswd` references.

- Disable any Hide My WP plugins or similar.

- Remove any

production/*contents (not the folder itself!). - Generate static HTML and assets to a newly created

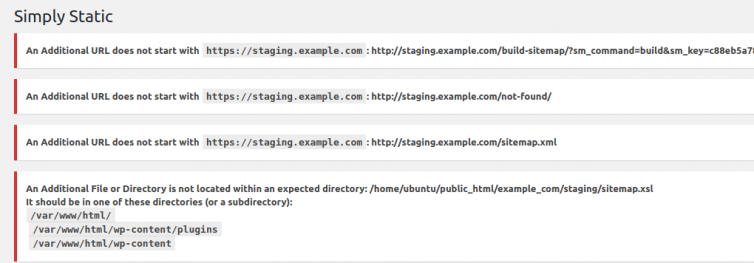

production/folder (confirm/var/www/production/). - Fix any problems with static HTML generation.

- Address any 404 or 30X issues, and especially any 50X errors.

- Generate static HTML and assets, again.

- View the static production site offline with a custom `vhosts.conf`.

  Tip: To view the static HTML files, you will still need a `vhosts` file. I recommend modifying the WordPress Docker container to serve the `production/` folder. Don’t forget about CORS, even offline. Hint:

  ```apache
  <IfModule mod_headers.c>
    Header add Access-Control-Allow-Origin "*"
  </IfModule>
  ```

- Spot-check for broken images or broken pages.

- (Optional) Replace strings or URLs throughout the site:

  ```bash
  docker-compose run --rm wpcli search-replace '/old-files/' '/files/' --dry-run
  ```

- (Optional) Create a Worker; test offline; deploy; set the DNS entry if needed.

- (Optional) Create a `redirect.json` for S3 for URL redirects and/or Workers. Include the index and error documents again:

  ```json
  {
    "IndexDocument": {
      "Suffix": "index.html"
    },
    "ErrorDocument": {
      "Key": "not-found/index.html"
    },
    "RoutingRules": [
      {
        "Condition": {
          "KeyPrefixEquals": "out/affiliate"
        },
        "Redirect": {
          "HostName": "affiliatesite.com",
          "HttpRedirectCode": "302",
          "Protocol": "https",
          "ReplaceKeyPrefixWith": "?params=..."
        }
      },
      {
        "Condition": {
          "KeyPrefixEquals": "out/affiliate/"
        },
        "Redirect": {
          "HostName": "affiliatesite.com",
          "HttpRedirectCode": "302",
          "Protocol": "https",
          "ReplaceKeyPrefixWith": "?params=..."
        }
      }
    ]
  }
  ```

- (Optional) Push the website configuration file (`redirect.json`) to all site buckets:

  ```bash
  aws s3api put-bucket-website --bucket example.com --website-configuration file://redirect.json
  aws s3api put-bucket-website --bucket static.example.com --website-configuration file://redirect.json
  ```

- Sync the production folder to (both of) the S3 buckets:

  ```bash
  cd production/
  aws s3 sync . s3://example.com --delete
  aws s3 sync . s3://static.example.com --delete
  ```

- Enroll in the Cloudflare Email Routing service; enable `catch-all`.

- Add or replace MX and TXT records in the DNS settings.

- Send a test email to `catchme@example.com` to verify that email forwarding works.

- Update Cloudflare DNS `CNAME` records to point to the corresponding S3 buckets:

  - CNAME: example.com → `example.com.s3-website-us-west-2.amazonaws.com`

  - CNAME: static → `static.example.com.s3-website-us-west-2.amazonaws.com`

- Clear the Cloudflare cache: Purge Everything. Here is a shell script:

  ```bash
  #!/bin/bash
  set -o allexport
  source .env
  set +o allexport

  curl -X POST "https://api.cloudflare.com/client/v4/zones/$ZONE_ID/purge_cache" \
    -H "X-Auth-Email: $EMAIL" \
    -H "Authorization: Bearer $API_KEY" \
    -H "Content-Type: application/json" \
    --data '{"purge_everything":true}'
  ```

- Test URL redirection; test affiliate links, etc.

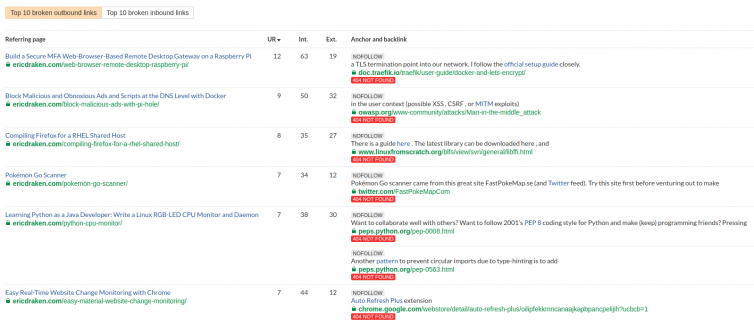

- Check for broken outbound links, and fix or remove them.

- Back up the running SQL database. Please see my Stack Overflow post for a detailed explanation.

  ```bash
  docker-compose exec db sh -c '\
  mysqldump -uroot -p$MYSQL_ROOT_PASSWORD --all-databases --routines --triggers \
  ' | gzip -c > ../sql/backup-`date '+%Y-%m-%d'`.sql.gz
  ```

- Cancel your GoDaddy hosting account.

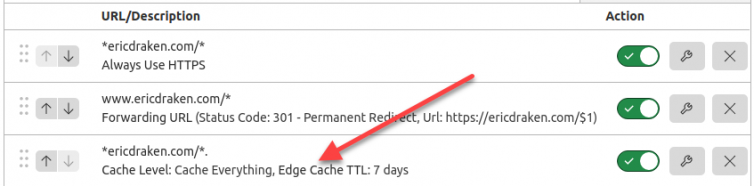

- Set Cloudflare Edge Cache rules.
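Since the goal is to automate these steps across many sites, the per-site values in the checklist can be generated rather than hand-edited. The following is a minimal sketch, assuming the same index and error documents and 302 redirects as the `redirect.json` step; the helper names `buildWebsiteConfig` and `websiteEndpoint` are hypothetical.

```javascript
// Build an S3 website configuration object (the shape of redirect.json)
// from a list of redirects. All helper names here are illustrative.
function buildWebsiteConfig(redirects) {
  return {
    IndexDocument: { Suffix: 'index.html' },
    ErrorDocument: { Key: 'not-found/index.html' },
    RoutingRules: redirects.map(({ prefix, host, replaceWith }) => ({
      Condition: { KeyPrefixEquals: prefix },
      Redirect: {
        HostName: host,
        HttpRedirectCode: '302',
        Protocol: 'https',
        ReplaceKeyPrefixWith: replaceWith,
      },
    })),
  };
}

// CNAME target for a bucket's website endpoint. The dash form matches the
// us-west-2 endpoints in the checklist; some regions use a dot instead
// (s3-website.REGION), so check the region before relying on this.
function websiteEndpoint(bucket, region) {
  return `${bucket}.s3-website-${region}.amazonaws.com`;
}

const config = buildWebsiteConfig([
  { prefix: 'out/affiliate', host: 'affiliatesite.com', replaceWith: '?params=...' },
]);

console.log(JSON.stringify(config, null, 2));
console.log(websiteEndpoint('static.example.com', 'us-west-2'));
// prints "static.example.com.s3-website-us-west-2.amazonaws.com"
```

The JSON output could be written to `redirect.json` and pushed with the `put-bucket-website` commands from the checklist.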

The GoDaddy Experience Simulator

Friends, GoDaddy’s cachet from the dot-com boom has long faded. Since the founder sold his majority stake in 2011, it has transformed into a profit grinder—built on aging hardware, cobbled-together third-party portals, and a mess of upselling. GoDaddy is a remnant of a bygone era, in a landscape where AWS, Linode, and Vultr (to name a few) treat hosting as their core business.

Here is a video of me trying to cancel my hosting account. This is me in real time, struggling through the broken GoDaddy interface. You’ll see that many pages are non-functional, so I had to reverse-engineer the JavaScript in the browser console to bypass the “chat with an agent first” blocker—and jump straight to the satisfying denouement.

GoDaddy, just… ByeDaddy.

Did you experience how broken and painful the GoDaddy backend is?

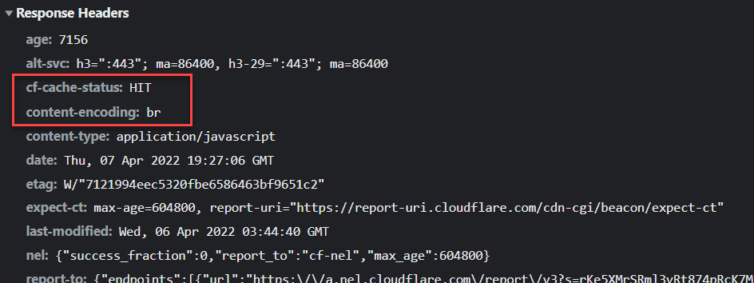

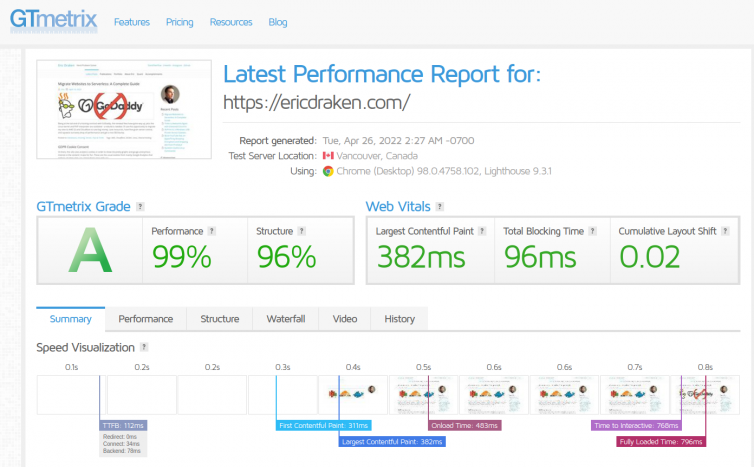

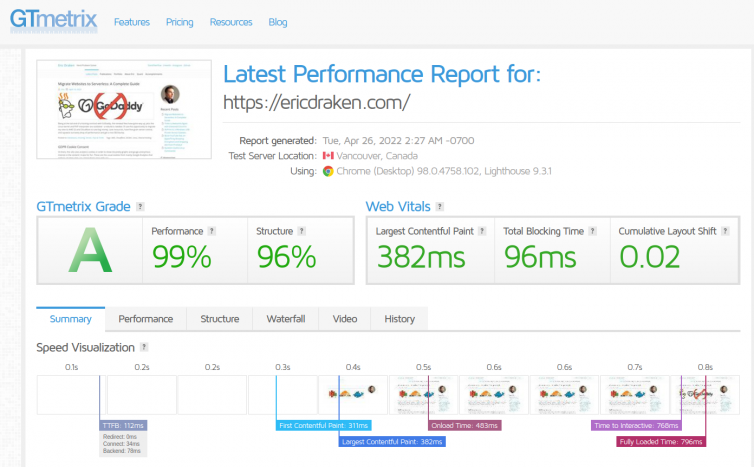

Results and Summary

Hello from S3. I’ve moved ericdraken.com to S3 + Cloudflare, and you’re reading this from Cloudflare’s cache with S3 as the origin. I expect to pay about 12 cents a month to host this site. Let’s see how fast it loads:

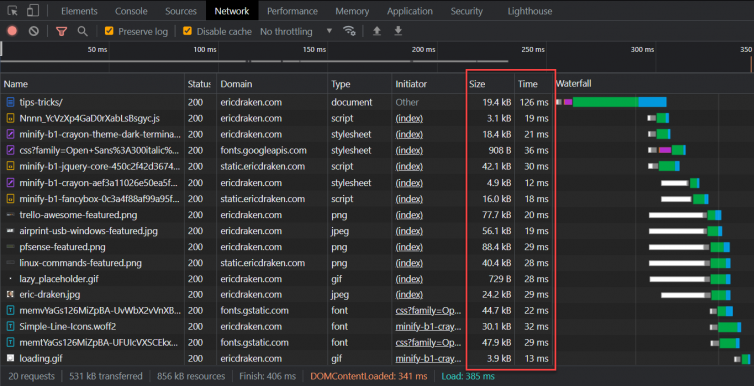

The waterfall and timings look beautiful, too.

Success

When you consider that a WordPress site with a heavy theme and dozens of plugins can take 5–8 seconds to load under Apache and PHP, achieving sub-one-second load times feels nearly miraculous.

Notes:

- SRE = Site Reliability Engineer ↩

- You probably didn’t consider that serverless buckets return content uncompressed, which can actually slow down your site. ↩

- Shard = splitting your website across multiple repositories. ↩

- 404 errors from this tracking pixel still show up in logs. ↩

- A nasty technique is to skip waiting for `ACK` responses before launching the next salvo. ↩