Unblock Google Analytics: Prevent AdBlockers from Blocking Site Analytics

I show you how I (used to) un-adblock Google Analytics to get full visitor insights on my pages through some zany techniques. I thought about patenting these, but it’s more fun to share. I can defeat my own techniques, and the community will likely catch up. Until then, we can enjoy visitor analytics despite regex-based adblockers and DNS blockers (e.g. Pi-Hole).

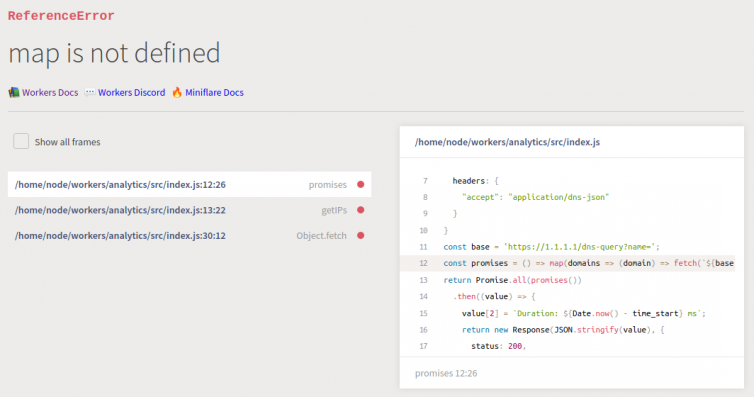

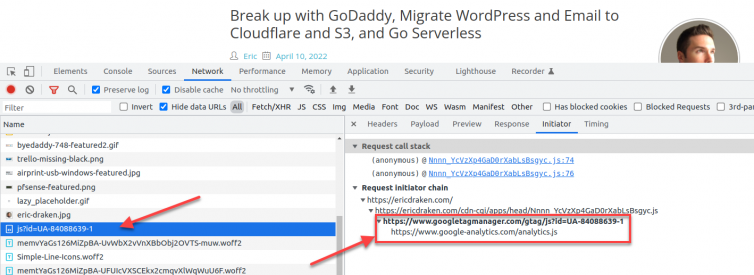

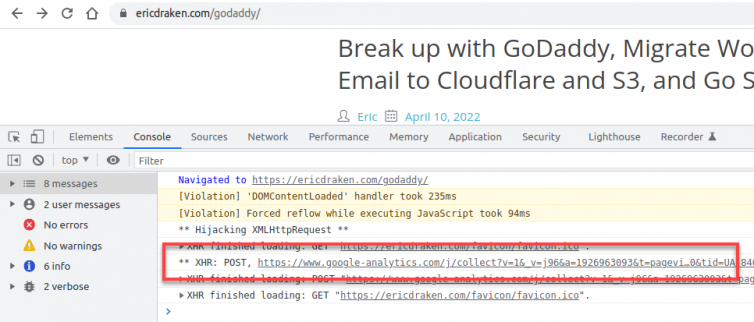

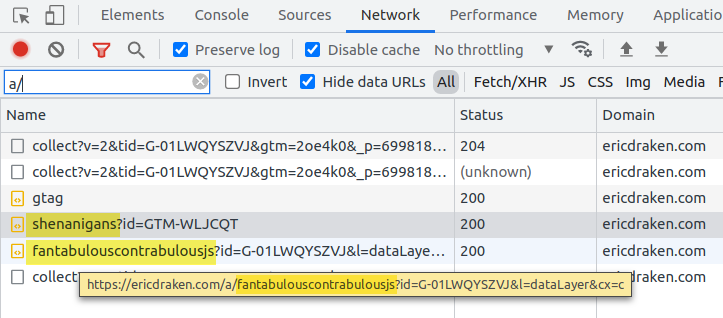

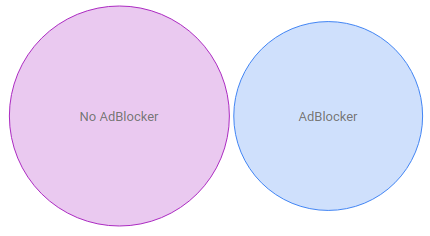

Consider the following network traffic:

I use adblockers in the browser and at the DNS level with Pi-Hole and pfSense. Millions of people do as well because ads are obnoxious and even malicious.

However, I’m amenable to a website collecting anonymous visitor behaviour information with Google Tag Manager. I use it. But, it’s blocked in uBlock and pfSense as a well-known tracking domain. I’ll explore how to prevent Google Tag Manager from being blocked.

Obviously, we need to cloak or hide the googletagmanager.com domain (and more). But my websites are serverless, so server-side scripts cost money per execution.¹

I cannot host a server-side script in PHP or ASPX or Node because I do not have a server. What are some ideas to unblock Google Tag Manager and Google Analytics?

Replace the Domain with an IPv4?

We can replace the domain with the IPv4 address in theory, but that only bypasses regex-based browser blockers and DNS-lookup blockers; pfSense and Pi-Hole also use community lists that block IP addresses in a game of whack-a-mole. Also, that domain may have many, many A and AAAA records that change.

The Host header needs to be set, which has been impossible (for the bulk of visitors) since 2015. Also, HTTPS will fail because an IP address is not in the certificate SAN.

Replace the Domain with an IPv6?

In North America, virtually everyone I know has IPv6 disabled in their router, PC, Linux server, you name it. While replacing the domain with the IPv6 address would bypass the IPv4 community blocklists, too many visitors without working IPv6 could never reach GTM, so it would be effectively blocked for them anyway.

Copy and Paste the Core Google Tag Manager JavaScript?

I like your thinking. However, even though the GTM JavaScript doesn’t change very often, it does change. Also, the script needs to phone home via a WebSocket or invisible pixel or XHR request or iframe. Those calls home will likely be blocked as they are obvious in plaintext. Replacing the domains with the IPs has the same problems as the previous ideas.

The blocked domains, such as google-analytics.com, are still in the client-side code.

Proxy the Google Tag Manager Communication Through a Worker?

Preprocessing the GTM and Analytics scripts server-side in an AWS Lambda or Cloudflare Worker would allow the whole Google Tag Manager core to be fetched and deobfuscated, and its offending domains replaced with Lambda endpoints that proxy the requests to Google (via XHR or invisible pixels, not WebSockets). The co-opted GTM code would then be cached and sent client-side. No one would be the wiser.
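To make the Worker idea concrete, here is a minimal sketch of the domain-replacement step, assuming the Worker has already fetched the GTM or Analytics source as plain text. The function name, the domain list, and the `/gtm/https/` path prefix are illustrative assumptions, not the code running on this site. It only catches literal URLs; anything assembled at runtime by string concatenation slips past it.

```javascript
// Hypothetical helper: point literal Google URLs inside a fetched script at a
// same-origin proxy path, e.g.
//   "https://www.google-analytics.com/j/collect" -> "/gtm/https/www.google-analytics.com/j/collect"
function rewriteScriptDomains(source, domains, proxyPrefix = '/gtm/') {
  // Escape regex metacharacters (the dots and hyphens) in each domain.
  const escaped = domains.map((d) => d.replace(/[.*+?^${}()|[\]\\-]/g, '\\$&'));
  // Optional "http:" or "https:" scheme, then "//", then one of the listed domains.
  const re = new RegExp('(https?:)?//(' + escaped.join('|') + ')', 'gi');
  return source.replace(re, (_match, scheme, domain) => {
    // Protocol-relative URLs ("//host/...") are assumed to be HTTPS.
    const proto = scheme ? scheme.slice(0, -1).toLowerCase() : 'https';
    return `${proxyPrefix}${proto}/${domain.toLowerCase()}`;
  });
}
```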

Proxy the Google Tag Manager Communication Through Redirects?

How about co-opting the GTM code as in the previous idea, but replacing the outgoing Google domains with non-existent paths on the same website that redirect the XHR or pixel requests back to Google? Let’s see if that would even work.

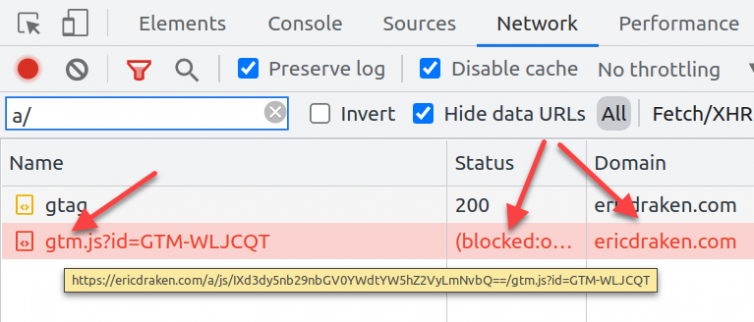

First, I’ll whitelist .googletagmanager.com.

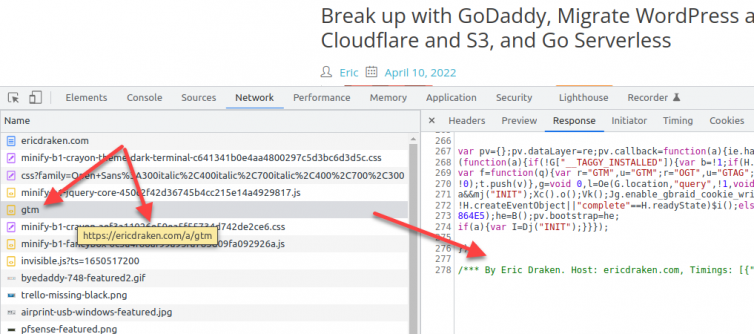

Now, let’s see if GTM loads:

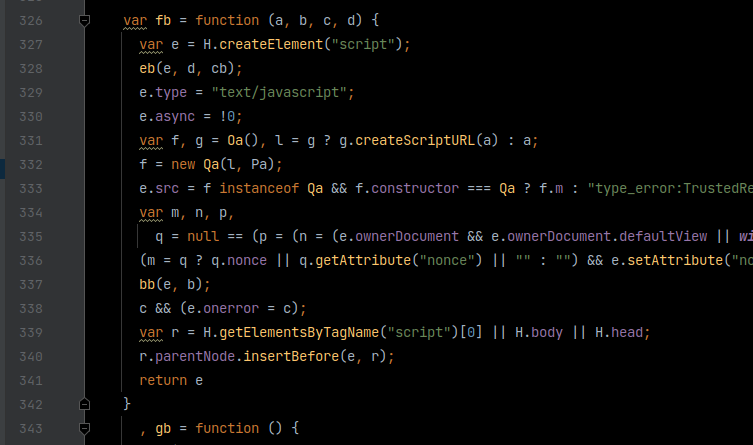

We find a lot of minified code:

```javascript
var R={sb:"_ee",Gc:"_syn_or_mod",zf:"_def",ck:"_uei",dk:"_upi",Qb:"_eu",Zj:"_pci",ag:"_is_passthrough_cid",$f:"_is_linker_valid",He:"_ntg",xd:"_nge",Mb:"event_callback",gd:"event_timeout",Ca:"gtag.config",Ia:"gtag.get",qa:"purchase",Jb:"refund",lb:"begin_checkout",Gb:"add_to_cart",Hb:"remove_from_cart",Ch:"view_cart",Bf:"add_to_wishlist",ra:"view_item",Ib:"view_promotion",ce:"select_promotion",be:"select_item",nb:"view_item_list",Af:"add_payment_info",Bh:"add_shipping_info",Ra:"value_key",ab:"value_callback", X:"allow_ad_personalization_signals",Bc:"restricted_data_processing",jc:"allow_google_signals",wa:"cookie_expires",Lb:"cookie_update",Cc:"session_duration",md:"session_engaged_time",ed:"engagement_time_msec",Fa:"user_properties",U:"transport_url",ba:"ads_data_redaction",ya:"user_data",wc:"first_party_collection",D:"ad_storage",K:"analytics_storage",sf:"region",tf:"wait_for_update",va:"conversion_linker",Ja:"conversion_cookie_prefix",ia:"value",da:"currency",Vf:"trip_type",Z:"items",Mf:"passengers", fe:"allow_custom_scripts",rb:"session_id",Rf:"quantity",fb:"transaction_id",eb:"language",dd:"country",cd:"allow_enhanced_conversions",me:"aw_merchant_id",je:"aw_feed_country",ke:"aw_feed_language",ie:"discount",T:"developer_id",Jf:"global_developer_id_string",Hf:"event_developer_id_string",nd:"delivery_postal_code",te:"estimated_delivery_date",qe:"shipping",xe:"new_customer",ne:"customer_lifetime_value",se:"enhanced_conversions",ic:"page_view",la:"linker",M:"domains",Ob:"decorate_forms",Gf:"enhanced_conversions_automatic_settings",
Jh:"auto_detection_enabled",ve:"ga_temp_client_id",de:"user_engagement",wh:"app_remove",xh:"app_store_refund",yh:"app_store_subscription_cancel",zh:"app_store_subscription_convert",Ah:"app_store_subscription_renew",Dh:"first_open",Eh:"first_visit",Fh:"in_app_purchase",Gh:"session_start",Hh:"allow_display_features",Qa:"campaign",kc:"campaign_content",mc:"campaign_id",nc:"campaign_medium",oc:"campaign_name",qc:"campaign_source",sc:"campaign_term",ja:"client_id",ka:"cookie_domain",Kb:"cookie_name",Za:"cookie_path", Ka:"cookie_flags",uc:"custom_map",kd:"groups",Lf:"non_interaction",Sa:"page_location",ye:"page_path",La:"page_referrer",Ac:"page_title",xa:"send_page_view",qb:"send_to",Dc:"session_engaged",vc:"euid_logged_in_state",Ec:"session_number",Zh:"tracking_id",hb:"url_passthrough",Nb:"accept_incoming",zc:"url_position",Pf:"phone_conversion_number",Nf:"phone_conversion_callback",Of:"phone_conversion_css_class",Qf:"phone_conversion_options",Uh:"phone_conversion_ids",Th:"phone_conversion_country_code",ob:"aw_remarketing", he:"aw_remarketing_only",ee:"gclid",Ih:"auid",Oh:"affiliation",Ff:"tax",pe:"list_name",Ef:"checkout_step",Df:"checkout_option",Ph:"coupon",Qh:"promotions",Ea:"user_id",Xh:"retoken",Da:"cookie_prefix",Cf:"disable_merchant_reported_purchases",Nh:"dc_natural_search",Mh:"dc_custom_params",Kf:"method",Yh:"search_term",Lh:"content_type",Sh:"optimize_id",Rh:"experiments",cb:"google_signals"}; R.jd="google_tld";R.pd="update";R.ue="firebase_id";R.xc="ga_restrict_domain";R.fd="event_settings";R.oe="dynamic_event_settings";R.Pb="user_data_settings";R.Tf="screen_name";R.Ae="screen_resolution";R.pb="_x_19";R.$a="enhanced_client_id";R.hd="_x_20";R.we="internal_traffic_results";R.od="traffic_type";R.ld="referral_exclusion_definition";R.yc="ignore_referrer";R.Kh="content_group";R.sa="allow_interest_groups";R.ze="redact_device_info",R.If="geo_granularity";var
Kc={};R.Yf=Object.freeze((Kc[R.Af]=1,Kc[R.Bh]=1,Kc[R.Gb]=1,Kc[R.Hb]=1,Kc[R.Ch]=1,Kc[R.lb]=1,Kc[R.be]=1,Kc[R.nb]=1,Kc[R.ce]=1,Kc[R.Ib]=1,Kc[R.qa]=1,Kc[R.Jb]=1,Kc[R.ra]=1,Kc[R.Bf]=1,Kc));R.Ce=Object.freeze([R.X,R.jc,R.Lb]);R.ii=Object.freeze([].concat(R.Ce));R.De=Object.freeze([R.wa,R.gd,R.Cc,R.md,R.ed]);R.ji=Object.freeze([].concat(R.De)); var Lc={};R.$d=(Lc[R.D]="1",Lc[R.K]="2",Lc);var Nc={Fi:"CA",zj:"CA-BC"};var Oc={},Pc=function(a,b){Oc[a]=Oc[a]||[];Oc[a][b]=!0},Qc=function(a){for(var b=[],c=Oc[a]||[],d=0;d<c.length;d++)c[d]&&(b[Math.floor(d/6)]^=1<<d%6);for(var e=0;e<b.length;e++)b[e]="ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-_".charAt(b[e]||0);return b.join("")},Uc=function(){for(var a=[],b=Oc.GA4_EVENT||[],c=0;c<b.length;c++)b[c]&&a.push(c);return 0<a.length?a:void 0};var S=function(a){Pc("GTM",a)};var Vc=new function(a,b){this.m=a;this.defaultValue=void 0===b?!1:b}(1933);var Xc=function(){var a=Wc,b="Xe";if(a.Xe&&a.hasOwnProperty(b))return a.Xe;var c=new a;a.Xe=c;a.hasOwnProperty(b);return c};var Wc=function(){var a={};this.m=function(){var b=Vc.m,c=Vc.defaultValue;return null!=a[b]?a[b]:c};this.o=function(){a[Vc.m]=!0}};var Yc=[];function Zc(){var a=ab("google_tag_data",{});a.ics||(a.ics={entries:{},set:$c,update:ad,addListener:bd,notifyListeners:cd,active:!1,usedDefault:!1,usedUpdate:!1,accessedDefault:!1,accessedAny:!1,wasSetLate:!1});return a.ics} function $c(a,b,c,d,e,f){var g=Zc();g.usedDefault||!g.accessedDefault&&!g.accessedAny||(g.wasSetLate=!0);g.active=!0;g.usedDefault=!0;if(void 0!=b){var l=g.entries,m=l[a]||{},n=m.region,p=c&&k(c)?c.toUpperCase():void 0;d=d.toUpperCase();e=e.toUpperCase();if(""===d||p===e||(p===d?n!==e:!p&&!n)){var q=!!(f&&0<f&&void 0===m.update),r={region:p,initial:"granted"===b,update:m.update,quiet:q};if(""!==d||!1!==m.initial)l[a]=r;q&&G.setTimeout(function(){l[a]===r&&r.quiet&&(r.quiet=!1,dd(a),cd(),Pc("TAGGING", 2))},f)}}}function ad(a,b){var
c=Zc();c.usedDefault||c.usedUpdate||!c.accessedAny||(c.wasSetLate=!0);c.active=!0;c.usedUpdate=!0;if(void 0!=b){var d=ed(c,a),e=c.entries,f=e[a]=e[a]||{};f.update="granted"===b;var g=ed(c,a);f.quiet?(f.quiet=!1,dd(a)):g!==d&&dd(a)}}function bd(a,b){Yc.push({Re:a,Oi:b})}function dd(a){for(var b=0;b<Yc.length;++b){var c=Yc[b];pa(c.Re)&&-1!==c.Re.indexOf(a)&&(c.Wg=!0)}} function cd(a,b){for(var c=0;c<Yc.length;++c){var d=Yc[c];if(d.Wg){d.Wg=!1;try{d.Oi({consentEventId:a,consentPriorityId:b})}catch(e){}}}}function ed(a,b){var c=a.entries[b]||{};return void 0!==c.update?c.update:c.initial} var fd=function(a){var b=Zc();b.accessedAny=!0;return ed(b,a)},gd=function(a){var b=Zc();b.accessedDefault=!0;return(b.entries[a]||{}).initial},hd=function(a){var b=Zc();b.accessedAny=!0;return!(b.entries[a]||{}).quiet},id=function(){if(!Xc().m())return!1;var a=Zc();a.accessedAny=!0;return a.active},jd=function(){var a=Zc();a.accessedDefault=!0;return a.usedDefault},kd=function(a,b){Zc().addListener(a,b)},ld=function(a,b){Zc().notifyListeners(a,b)},md=function(a,b){function c(){for(var e=0;e<b.length;e++)if(!hd(b[e]))return!0; ...
```

We unminify and inspect it to find the outgoing URLs or domains.

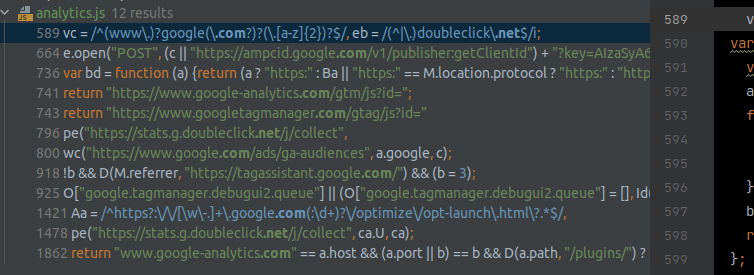

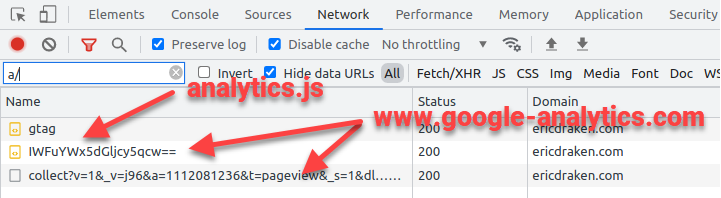

Guess what? All this script did was load another script: analytics.js. Be sure to whitelist:

Here is a search for the outgoing domains in the script.

The requests back to Google are often POST requests. Here is an example of a page-load event:

```javascript
fetch("https://www.google-analytics.com/j/collect?v=1&_v=j96&a=583843643&t=pageview&_s=1&dl=https%3A%2F%2Fericdraken.com%2F&ul=en-us&de=UTF-8&dt=Eric%20Draken%20-%20Hard-Problem%20Solver&sd=24-bit&sr=1920x1080&vp=1905x348&je=0&_u=YEBAAUBBAAAAAC~&jid=379366183&gjid=214964093&cid=1644775999.1650408590&tid=UA-83087639-1&_gid=1704544202.1650419590&_r=1&gtm=2ou4i1&z=1464828436", {
  "headers": {
    "accept": "*/*",
    "accept-language": "en-US,en;q=0.9",
    "cache-control": "no-cache",
    "content-type": "text/plain",
    "pragma": "no-cache",
    "sec-ch-ua": "\" Not A;Brand\";v=\"99\", \"Chromium\";v=\"98\", \"Google Chrome\";v=\"98\"",
    "sec-ch-ua-mobile": "?0",
    "sec-ch-ua-platform": "\"Linux\"",
    "sec-fetch-dest": "empty",
    "sec-fetch-mode": "cors",
    "sec-fetch-site": "cross-site",
    "Referer": "https://ericdraken.com/",
    "Referrer-Policy": "strict-origin-when-cross-origin"
  },
  "method": "POST"
});
```

Replacing a domain with its IP would work fine against a dedicated server without a Host header; however, the IPs for Google Analytics are virtual-hosted, so a Host header is needed. Requests to the bare IPs fail silently because Google Tag Manager hides browser errors very well.

Perform DNS Lookups then Replace Domains with IPs?

Let me try an experiment…

Now, I’m behind pfSense so all DNS requests go through Unbound and I cannot dig @8.8.8.8, for example. I’ll perform a DNS lookup on my phone while specifying DNS servers (like 8.8.8.8 and 1.1.1.1) and reproduce the results below:

- 142.250.217.78

- 142.250.69.206

- 142.250.217.78

- 142.250.69.206

- 142.250.81.206

- 142.251.40.174

- 142.250.64.110

We see that the CNAME for www.google-analytics.com flattens to an IP that varies across time with a short TTL. It’s conceivable that if a Worker or Lambda performed the DNS lookup itself and replaced domains with IPs, we would sail past regex and blocklists.

Again, this fails because a Host header is needed.

A Strategy to Rewrite Analytics URLs

Let’s brainstorm ways to prevent Google Tag Manager from being blocked.

- We could search for

XMLHttpRequestand hook into those objects.- We cannot set a

Hostheader since 2015.

- We cannot set a

- We could search for

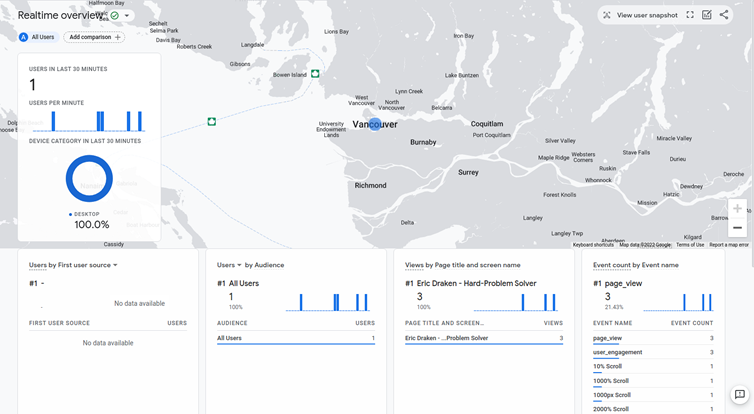

https://and rewrite that/those to just/gtm/https/. This would make all outgoing requests hit https://ericdraken.com/gtm/ which is a local domain. From there, a Worker can proxy thePOSTto the remaining URL path (converted back to a URL).- Such a proxy Worker will get blasted and the quota will get depleted quickly.

- Workers allows 100,000 calls per day, 10ms per execution.

- Lambdas charges per millisecond of execution.

Let’s pull on one of these threads some more. Given a URL like

https://ericdraken.com/gtm/

The event URL can be reconstructed. Could this still be blocked by regex lists? Maybe. However, it would be easy to make an S3 redirect rule like:

```json
"RoutingRules": [
  {
    "Condition": {
      "KeyPrefixEquals": "gtm/https/www.google-analytics.com/"
    },
    "Redirect": {
      "HostName": "www.google-analytics.com",
      "HttpRedirectCode": "302",
      "Protocol": "https",
      "ReplaceKeyPrefixWith": ""
    }
  }
]
```
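The S3 rule above does the reconstruction with a redirect. If a Worker handled these paths instead, turning `/gtm/https/<host>/<rest>` back into a URL might look like this sketch. The allowlist is my own assumption; without it the endpoint would be an open proxy for any host.

```javascript
// Hypothetical decoder for the "/gtm/<scheme>/<host>/<rest>" path scheme, e.g.
//   "/gtm/https/www.google-analytics.com/j/collect?v=1" -> "https://www.google-analytics.com/j/collect?v=1"
// Only allowlisted hosts are accepted so the endpoint cannot be used as an open proxy.
const ALLOWED_HOSTS = new Set(['www.google-analytics.com', 'www.googletagmanager.com']);

function gtmPathToUrl(pathAndQuery, prefix = '/gtm/') {
  if (!pathAndQuery.startsWith(prefix)) return null;
  const rest = pathAndQuery.slice(prefix.length);
  // Scheme, then host (up to the next "/", "?" or "#"), then whatever remains.
  const match = /^(https?)\/([^\/?#]+)(.*)$/.exec(rest);
  if (!match) return null;
  const [, scheme, host, tail] = match;
  if (!ALLOWED_HOSTS.has(host.toLowerCase())) return null;
  return new URL(`${scheme}://${host}${tail}`).toString();
}
```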

To avoid regex blocking in the browser, if we hook into the XMLHttpRequest prototype for open(), we could encode the www.google-analytics.com domain like so:

https://ericdraken.com/gtm/

A modification to the S3 redirect would then be:

```json
"RoutingRules": [
  {
    "Condition": {
      "KeyPrefixEquals": "gtm/wgac/"
    },
    "Redirect": {
      "HostName": "www.google-analytics.com",
      "HttpRedirectCode": "302",
      "Protocol": "https",
      "ReplaceKeyPrefixWith": ""
    }
  }
]
```
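On the browser side, the `open()` hook would swap the hostname for its short alias before the request leaves the page, and the redirect (or a Worker) would swap it back. A sketch of both directions, assuming every aliased host is served over HTTPS; `HOST_ALIASES`, `encodeAnalyticsUrl`, and `decodeAnalyticsPath` are hypothetical names.

```javascript
// Hypothetical alias table: telltale hostnames -> short, innocuous path tokens.
const HOST_ALIASES = { 'www.google-analytics.com': 'wgac' };

// Browser side: rewrite a Google URL into a same-origin path before XHR.open().
function encodeAnalyticsUrl(url, origin) {
  const u = new URL(url, origin);
  const alias = HOST_ALIASES[u.hostname];
  if (!alias) return url; // unrelated URLs pass through untouched
  return `${origin}/gtm/${alias}${u.pathname}${u.search}`;
}

// Redirect/Worker side: map "/gtm/<alias>/<path>?<query>" back to the real host.
function decodeAnalyticsPath(pathAndQuery) {
  const m = /^\/gtm\/([a-z0-9]+)(\/[^?#]*)?(\?[^#]*)?$/i.exec(pathAndQuery);
  if (!m) return null;
  const host = Object.keys(HOST_ALIASES).find((h) => HOST_ALIASES[h] === m[1]);
  if (!host) return null;
  return `https://${host}${m[2] || '/'}${m[3] || ''}`;
}
```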

Server-Side Analytics Proxying with AWS Lambdas

Why aren’t Lambdas my go-to for serverless dynamism? AWS Lambdas cold-start, meaning the first request after a quiet period responds slowly. You can pay more to keep them warm (provisioned concurrency), but it’s a big jump.

But, do we need zippy analytics communication? Suppose we fire an analytics event to a cold-start Lambda and it takes 5 seconds to warm up and process, do we care? No. We can fire and forget. In that case, let’s look at AWS Lambda pricing again.

AWS Lambda Pricing

Duration cost depends on the amount of memory you allocate to your function. You can allocate any amount of memory to your function between 128 MB and 10,240 MB, in 1 MB increments. (ref)

We have a choice of ARM or x86 architectures. Honestly, the Lambda pricing is a Gong Show so I will focus on the most significant metric to ballpark the cost of this strategy.

| Memory (MB) | Price per 1 ms |
|---|---|
| 128 | $0.0000000021 |

If we use a light language like JavaScript, proxy and ignore the response, and essentially treat analytic events like UDP broadcasts, then we can write a damn-fast Lambda proxy and maybe pay nothing?

Let’s see.

5 ms per request * 1000 events/day * 31 days = 155,000 ms

That’s just under 3 Lambda-minutes. Then, the pricing would be:

155,000 ms * $0.0000000021/ms = $0.0003255

That’s not even a penny. In fact, by working backwards from a bill of $0.0049 (just under half a cent), we get

$0.0049 = $0.0000000021/ms * x ms

where x is about 2,333,333 ms, or 39 Lambda-minutes per month for free. That’s a lot for free!
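The same arithmetic as a tiny script, in case you want to plug in your own traffic. The 5 ms per request, 1,000 events per day, and $0.0049 budget are the assumptions from the text; like the text, it ignores Lambda's separate per-request charge and any free tier.

```javascript
// Back-of-the-envelope Lambda duration cost (128 MB tier).
// Assumptions from the text: 5 ms per request, 1,000 events per day, 31 days per month.
const PRICE_PER_MS_128MB = 0.0000000021; // USD per billed millisecond

function monthlyDurationMs(msPerRequest, eventsPerDay, days = 31) {
  return msPerRequest * eventsPerDay * days;
}

function durationCostUsd(billedMs, pricePerMs = PRICE_PER_MS_128MB) {
  return billedMs * pricePerMs;
}

// Invert cost = pricePerMs * x to find how many billed milliseconds fit in a budget.
function msForBudget(budgetUsd, pricePerMs = PRICE_PER_MS_128MB) {
  return Math.floor(budgetUsd / pricePerMs);
}

const monthlyMs = monthlyDurationMs(5, 1000);   // 155000
const monthlyCost = durationCostUsd(monthlyMs); // about 0.0003255 USD
const budgetMs = msForBudget(0.0049);           // about 2.33 million ms
console.log(monthlyMs, monthlyCost.toFixed(7), budgetMs, (budgetMs / 60000).toFixed(1));
```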

Ok, let’s write a Lambda to proxy Google Tag Manager events to bypass adblockers.

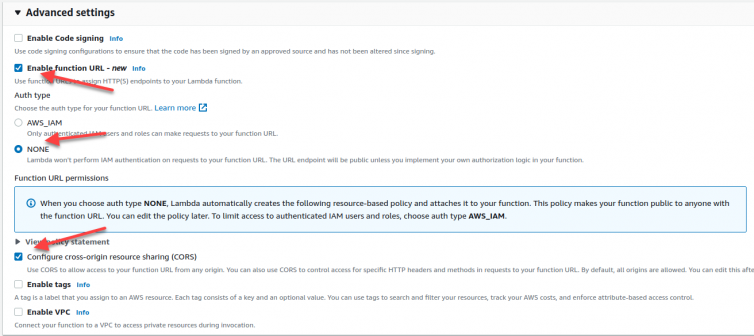

Exploring Lambdas

I’ll head over to the AWS web console and create a new Lambda with a public HTTPS endpoint.

I’ll code this in Python 3.9 on ARM architecture as a PoC. If it is slow, then I can come back and code it in Node (vanilla JavaScript is not available).

I end up with a public Lambda URL like this:

https://e4hjkz6trtubruicifxemin2kq0kdsyi

When I run the Lambda with the default hello-world Python 3 script, I get:

```python
import json

def lambda_handler(event, context):
    return {
        'statusCode': 200,
        'body': json.dumps('Hello from Lambda!')
    }
```

Test results

```text
Test Event Name
GET

Response
{
  "statusCode": 200,
  "body": "\"Hello from Lambda!\""
}

Function Logs
START RequestId: b8389d5c-c6b1-4e2f-88dc-d4421dd816ab Version: $LATEST
END RequestId: b8389d5c-c6b1-4e2f-88dc-d4421dd816ab
REPORT RequestId: b8389d5c-c6b1-4e2f-88dc-d4421dd816ab Duration: 0.95 ms Billed Duration: 1 ms Memory Size: 128 MB Max Memory Used: 36 MB Init Duration: 99.41 ms
```

Nice. Now, we have a public Lambda that anyone can hit that runs some newer Python. It’s up to us to protect the Lambda with referrer checks and origin IP checks with some creativity. We can also see that I was just billed for 1 ms of Lambda time. Here is what we know so far:

- 36 MB of RAM was used to print “Hello from Lambda!”

- 100 ms was spent on warm-up (not billed).

- Execution took 0.95 ms.

- Billing was 1 ms.

Let me run this again and see what billing looks like:

```text
Test Event Name
GET

Response
{
  "statusCode": 200,
  "body": "\"Hello from Lambda!\""
}

Function Logs
START RequestId: 67c886a6-17d5-4399-af61-333e5f8b91e7 Version: $LATEST
END RequestId: 67c886a6-17d5-4399-af61-333e5f8b91e7
REPORT RequestId: 67c886a6-17d5-4399-af61-333e5f8b91e7 Duration: 1.03 ms Billed Duration: 2 ms Memory Size: 128 MB Max Memory Used: 36 MB
```

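Notice that billing rounds up to the next whole millisecond: 1.03 ms of execution was billed as 2 ms. There are big drawbacks with using Lambdas for hiding Google Analytics.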

Google Analytics Proxying with a Cloudflare Worker

For this proof-of-concept, I’ll write some Worker code in vanilla JavaScript. It is nice to have the breathing room of the 10 ms that Cloudflare gives us to run warm-start Workers.

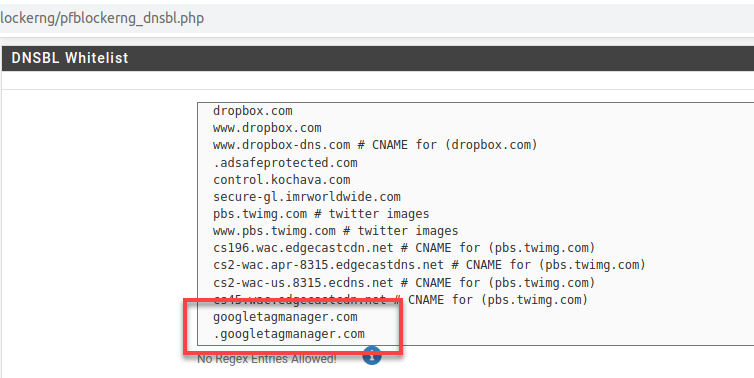

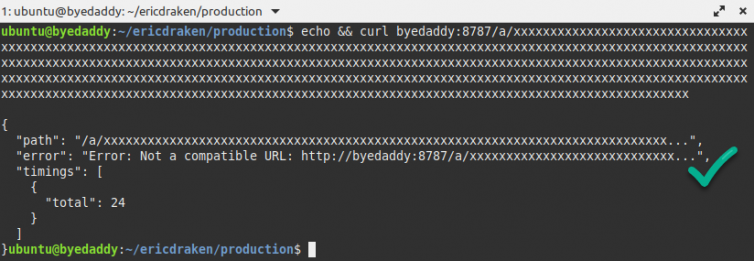

The Host header cannot be set in XHR requests since about Firefox 43 (2015), so performing DNS lookups to replace a blocked domain with its IPv4 address is a blind alley. I leave this section here because it is still neat. Feel free to skip it, but all failures are life lessons.

Programmatic DNS Lookups

Here is a simple DNS lookup script.

```javascript
export default {
  async fetch(request, env) {
    // Equivalent curl commands:
    // curl --http2 -H 'accept: application/dns-json' 'https://1.1.1.1/dns-query?name=www.google-analytics.com' \
    //   --next --http2 -H 'accept: application/dns-json' 'https://1.1.1.1/dns-query?name=www.googletagmanager.com'
    const init = {
      headers: {
        "accept": "application/dns-json"
      }
    };
    const base = 'https://1.1.1.1/dns-query?name=';
    return Promise.all([
      fetch(`${base}www.google-analytics.com`, init).then(value => value.json()),
      fetch(`${base}www.googletagmanager.com`, init).then(value => value.json())
    ])
      .then((value) => {
        return new Response(JSON.stringify(value), {
          status: 200,
          headers: { 'Content-Type': 'application/json' }
        });
      })
      .catch((err) => {
        // Error objects serialize to {} with JSON.stringify, so send the message instead
        return new Response(JSON.stringify({ "err": String(err) }), {
          status: 500,
          headers: { 'Content-Type': 'application/json' }
        });
      });
  }
};
```

We see it calls the 1.1.1.1 DoH DNS lookup endpoint and returns some JSON information containing the A records we seek.

```json
[
  {
    "Status": 0,
    "Question": [
      { "name": "www.google-analytics.com", "type": 1 }
    ],
    "Answer": [
      { "name": "www.google-analytics.com", "type": 5, "TTL": 86221, "data": "www-google-analytics.l.google.com." },
      { "name": "www-google-analytics.l.google.com", "type": 1, "TTL": 121, "data": "142.250.191.46" }
    ]
  },
  {
    "Status": 0,
    "Question": [
      { "name": "www.googletagmanager.com", "type": 1 }
    ],
    "Answer": [
      { "name": "www.googletagmanager.com", "type": 5, "TTL": 86379, "data": "www-googletagmanager.l.google.com." },
      { "name": "www-googletagmanager.l.google.com", "type": 1, "TTL": 279, "data": "142.250.72.200" }
    ]
  }
]
```
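To use that JSON, the Worker has to skip the CNAME hop (type 5) and keep the A records (type 1). A sketch, assuming Cloudflare's `application/dns-json` response shape shown above; `aRecordsByHost` is a hypothetical helper. The minimum TTL is kept because it bounds how long the IPs can reasonably be cached.

```javascript
// DNS record type codes seen in the answer above: 1 = A, 5 = CNAME.
const DNS_TYPE_A = 1;

// Reduce DoH JSON answers to { askedName: { ips: [...], ttl } }, skipping the CNAME hop.
// `ttl` is the smallest A-record TTL, i.e. how long the IPs can reasonably be cached.
function aRecordsByHost(responses) {
  const result = {};
  for (const res of responses) {
    // Status 0 is NOERROR; anything else (e.g. 3 = NXDOMAIN) carries no usable answer.
    if (!res || res.Status !== 0 || !Array.isArray(res.Question) || res.Question.length === 0) continue;
    const asked = res.Question[0].name;
    const aRecords = (res.Answer || []).filter((rr) => rr.type === DNS_TYPE_A);
    if (aRecords.length === 0) continue;
    result[asked] = {
      ips: aRecords.map((rr) => rr.data),
      ttl: Math.min(...aRecords.map((rr) => rr.TTL)),
    };
  }
  return result;
}
```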

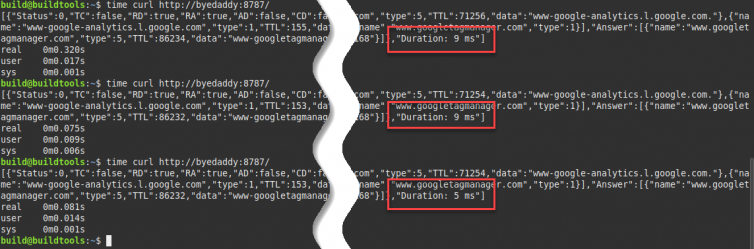

How long did this take?

```shell
build@buildtools:~$ time curl http://byedaddy:8787/
[{"Status":0,"TC":false,"RD":true,"RA":true,"AD":false,"CD":false,"Question":[{"name":"www.google-analytics.com","type":1}],"Answer":[{"name":"www.google-analytics.com","type":5,"TTL":86178,"data":"www-google-analytics.l.google.com."},{"name":"www-google-analytics.l.google.com","type":1,"TTL":78,"data":"142.250.191.46"}]},{"Status":0,"TC":false,"RD":true,"RA":true,"AD":false,"CD":false,"Question":[{"name":"www.googletagmanager.com","type":1}],"Answer":[{"name":"www.googletagmanager.com","type":5,"TTL":86253,"data":"www-googletagmanager.l.google.com."},{"name":"www-googletagmanager.l.google.com","type":1,"TTL":153,"data":"142.251.46.200"}]}]

real    0m0.095s
user    0m0.015s
sys     0m0.001s
build@buildtools:~$
```

This doesn’t tell me much, so I modified the Worker to add timing information which I then ran a few times:

```javascript
export default {
  async fetch(request, env) {
    const time_start = Date.now();
    const init = {
      headers: {
        "accept": "application/dns-json"
      }
    };
    const base = 'https://1.1.1.1/dns-query?name=';
    return Promise.all([
      fetch(`${base}www.google-analytics.com`, init).then(value => value.json()),
      fetch(`${base}www.googletagmanager.com`, init).then(value => value.json())
    ])
      .then((value) => {
        // Append the elapsed time as a third array element
        value[2] = `Duration: ${Date.now() - time_start} ms`;
        return new Response(JSON.stringify(value), {
          status: 200,
          headers: { 'Content-Type': 'application/json' }
        });
      })
      .catch((err) => {
        // Always return a Response; an empty catch resolves to undefined and the Worker errors out
        return new Response(JSON.stringify({ "err": String(err) }), {
          status: 500,
          headers: { 'Content-Type': 'application/json' }
        });
      });
  }
};
```

Performing two DNS lookups is fairly quick. Next, we have to get the Google Tag Manager code and Analytics code.

Phase One: Hijack Google Tag Manager

Before getting into a working script, here is the idea for co-opting Google Tag Manager:

Again, IP-replacement is not a viable solution. What is valuable is the insight into the timings of portions of Worker scripts to see how we can stay under 10 ms.

```json
"timings": [
  { "function": "getGTMScript", "duration": 290 },
  { "function": "getGoogleIPs", "duration": 33 },
  { "function": "findScriptDomains", "duration": 7 },
  { "function": "replaceScriptDomains", "duration": 0 },
  { "total": "330 ms" }
]
```
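A timings array like the one above can be collected with a small wrapper around each step. This is a sketch; `timed` and `finishTimings` are hypothetical names, and the step functions named in the JSON are not shown in this post.

```javascript
// Hypothetical helper: run an async step and record how long it took,
// producing entries shaped like { "function": name, "duration": ms }.
async function timed(timings, name, fn) {
  const start = Date.now();
  try {
    return await fn();
  } finally {
    // Recorded even if the step throws, so failures still show up in the timings.
    timings.push({ function: name, duration: Date.now() - start });
  }
}

// Append the overall total, matching the last entry of the timings array.
function finishTimings(timings, startedAt) {
  timings.push({ total: `${Date.now() - startedAt} ms` });
  return timings;
}
```

One caveat, as I understand the Workers runtime: `Date.now()` only advances across I/O (a timing side-channel mitigation), so purely CPU-bound steps such as `replaceScriptDomains` can report 0 ms even when they do real work.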

The most expensive operation so far is actually getting the source of the GTM script.

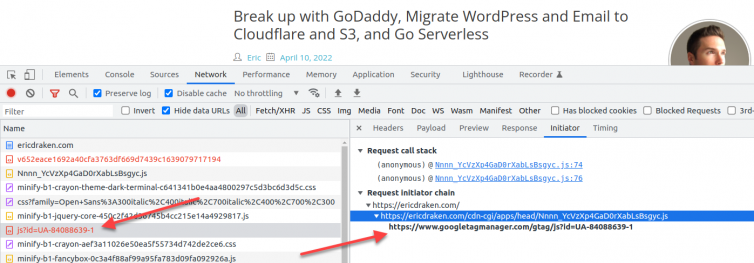

… fetch of GTM. Please note that your site’s GTM ID is hard-coded in gtm.js.

Now look at the timings:

```javascript
[
  { "function": "getGTMScript", "duration": 7 }, // <---- Much faster!
  { "function": "getGoogleIPs", "duration": 2 },
  { "function": "replaceScriptDomains", "duration": 0 },
  { "total": "9 ms" }
]
```

Before continuing, let’s see if I can load GTM with my customizations from my own domain as a checkpoint.

```html
<!--/*** Server-Side Google Tag Manager ***/-->
<script async src="/a/gtag"></script>
```

Excellent.

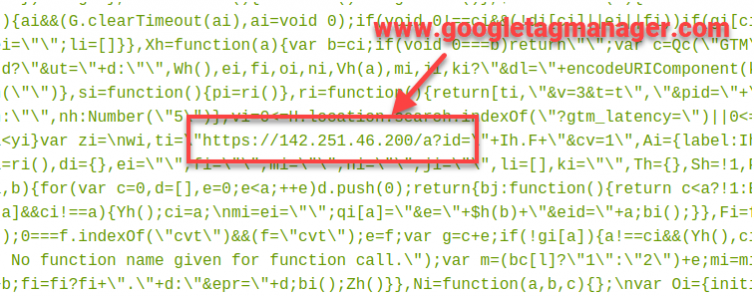

Gotcha: Inline Script Tags

Nice try, Eric. But just like shared servers, a request sent to a virtual-hosted IP must include the hostname or else the default virtual host may not be the intended service.

What happened here is that Google Tag Manager created a <script> tag and set the src to https://142.250.191.46/analytics.js which did not hit the service that provides the analytics script.

What can we do?

- … `<script>` src contents in the Worker and then load and `eval()` them in plaintext in the DOM.
- … `eval`’d Google Analytics code in my sites? My sites are serverless and not prone to XSS vulnerabilities.
- … analytics.js? The canonical resource has a max-age of 7200, which means it is cached for 2 hours; we cannot be certain it doesn’t change often.

Phase Two: Hijack Google Analytics Inline Scripts

With our interesting problem at hand, we need to co-opt both the inline script mechanism and the XMLHttpRequest to rewrite some offending URLs.

This is the desired XMLHttpRequest hijacking, which is as easy as paint-by-numbers: just replace prototype.open:

```javascript
// Note: I prefer explicit arguments over the nebulous 'arguments' magic keyword.
!(function (open) {
  console.log('** Hijacking XMLHttpRequest **');
  // Default async to true: an explicit `undefined` would otherwise be coerced to false (synchronous XHR)
  window.XMLHttpRequest.prototype.open = function (method, url, async = true, user = null, password = null) {
    console.log(`** XHR: ${method}, ${url}`);
    open.apply(this, [method, url, async, user, password]);
  };
})(window.XMLHttpRequest.prototype.open);
```
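Preview Mode (covered later) can inject the tag script more than once, so a reusable version of this hook benefits from an install guard. A sketch, assuming a caller-supplied `rewrite(url)` function; `installXhrRewrite` and the `__openRewritten` marker are hypothetical names. Taking the constructor as a parameter also makes it testable outside a browser.

```javascript
// Hypothetical installer: wraps XMLHttpRequest.prototype.open so every URL passes through
// `rewrite` first. A non-enumerable marker makes repeated calls a no-op.
function installXhrRewrite(XhrClass, rewrite) {
  const proto = XhrClass.prototype;
  if (proto.__openRewritten) return false; // already wrapped once; do not wrap the wrapper
  const originalOpen = proto.open;
  // async defaults to true: an explicit `undefined` for async would otherwise be
  // converted to false, turning the request synchronous.
  proto.open = function (method, url, async = true, user = null, password = null) {
    return originalOpen.call(this, method, rewrite(String(url)), async, user, password);
  };
  Object.defineProperty(proto, '__openRewritten', { value: true });
  return true;
}
```

In the page it would be called as `installXhrRewrite(window.XMLHttpRequest, myRewrite)`, where `myRewrite` is whatever URL encoder you settle on; a second call returns `false` and leaves the first wrapper in place.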

Rewrite Blocked Domains to Obfuscated Strings

Here is an idea of the concept:

Why this odd rewrite flow?

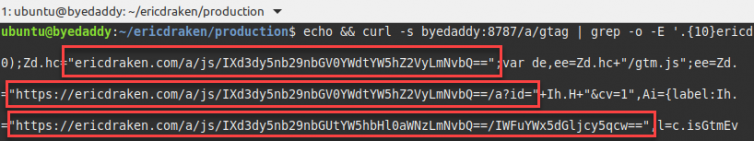

A script with the name analytics.js may be blocked by regex, just like the infamous fingerprint2.js is. The same goes for the www.google-analytics.com string in case the blocking regex is improperly written. Those telltale strings are base64-encoded. A known delimiter such as /js/ separates or prefixes those encoded strings. Any intermediary path segments and the query string sail through in the clear.

We can do even better.

Rewrite Blockable Strings to Encoded Strings

Here is the rewrite logic that I actually use in production:

To decode and fetch the URLs safely, the Worker listens on /a/* for JavaScript source files denoted by /a/js/. From there, every path segment is atob()-decoded. The result may be garbage if the segment is not base64-encoded, so we check the first character for the telltale, in this case a single !.
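The encode/decode round trip described here might look like the following sketch. Two assumptions of mine: the telltale `!` is prepended before encoding, and the URL-safe base64 alphabet (`-` and `_`, no padding) is used so an encoded segment can never contain a `/`. A plain segment could, in rare cases, happen to decode to text starting with `!` and be mangled; a longer telltale would make that less likely.

```javascript
// Hypothetical path-segment encoding: "!" + segment, base64url-encoded.
// The "!" is the telltale that marks a segment as encoded when decoding.
const TELLTALE = '!';

function toBase64Url(text) {
  // btoa() takes Latin-1 text; hostnames and file names here are plain ASCII.
  return btoa(text).replace(/\+/g, '-').replace(/\//g, '_').replace(/=+$/, '');
}

function fromBase64Url(segment) {
  const b64 = segment.replace(/-/g, '+').replace(/_/g, '/');
  return atob(b64 + '='.repeat((4 - (b64.length % 4)) % 4)); // throws if not base64
}

function encodeSegment(segment) {
  return toBase64Url(TELLTALE + segment);
}

// Decode a segment only if it decodes cleanly AND starts with the telltale.
function decodeSegment(segment) {
  try {
    const decoded = fromBase64Url(segment);
    return decoded.startsWith(TELLTALE) ? decoded.slice(TELLTALE.length) : segment;
  } catch {
    return segment; // not base64 at all, e.g. "www.example.com"
  }
}

// Encode only the known blockwords in a path; everything else stays readable.
function encodeBlockwords(path, blockwords) {
  return path
    .split('/')
    .map((s) => (blockwords.includes(s) ? encodeSegment(s) : s))
    .join('/');
}

function decodePath(path) {
  return path.split('/').map(decodeSegment).join('/');
}
```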

The plan is to keep a list of known blockwords like analytics.js and gtm.js and replace them in the GTM code. The same goes for blocked domains. Let’s see it in action:

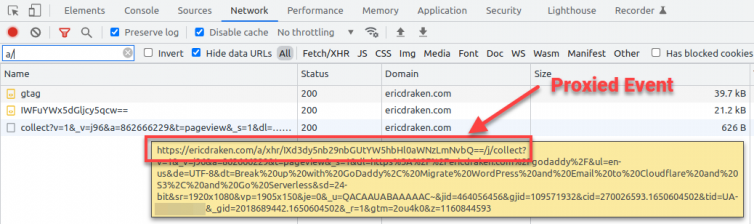

As we can see, gtag and analytics.js load via inline scripting. XHR requests are also being proxied. However, as a hint of things to come, it would be nice to tell the difference between inline `<script src=..>` requests and XHR requests on page events.

Phase Three: Intercept Google Analytics XMLHttpRequests

Since 2015, XMLHttpRequest has been hardened against executing XSS exploits by forbidding certain header overrides.

The following hijacker tries to work around the forbidden Host header: it replaces certain domains with their recent DNS-lookup IPs and “sets” the Host header.

```javascript
/** Client-side JS **/
export const xhrHijack = (dnsInfo) => (function (open) {
  // Confirm the structure of the dns info is an object
  if (typeof dnsInfo !== "object" || !dnsInfo) {
    console.error('** Unable to hijack XMLHttpRequest due to missing or bad dnsInfo **');
    return;
  }
  console.log(`** Hijacking XMLHttpRequests for ${Object.keys(dnsInfo)} **`);
  const pattern = 'https?\\\:\\\/\\\/(' + Object.keys(dnsInfo).join("|").replace(/[-\/\\^$*+?.()[\]{}]/g, '\\$&') + ')';
  const re = new RegExp(pattern, 'i');
  window.XMLHttpRequest.prototype.open = function (method, url, async = true, user = null, password = null) {
    url = url.toLowerCase(); // Safety
    if (re.test(url)) {
      console.log(`** XHR before: ${method}, ${url} **`);
      for (const [domain, ip] of Object.entries(dnsInfo)) {
        if (url.includes(domain)) {
          url = url.replace(domain, ip);
          console.log(`** XHR after: ${method}, ${url} **`);
          open.apply(this, [method, url, async, user, password]);
          // Set the header after open() and before send()
          /*******************************************************************
           * ERROR: Host is now a forbidden header since Firefox 43 (2015)!! *
           *******************************************************************/
          this.setRequestHeader('Host', domain);
          return;
        }
      }
    }
    // Passthru
    open.apply(this, [method, url, async, user, password]);
  };
})(window.XMLHttpRequest.prototype.open);
```

Additionally, to make this work, I’d have to downgrade requests to HTTP because there is no SAN entry for an IP address.

In case you are wondering, no, we cannot ask XHR requests to go through an actual, commercial proxy (one with a password, etc.).

Let’s turn back to our old standby for bypassing CORS errors and proxying requests, next.

Phase Three Redux: Proxy XMLHttpRequests

What will work is proxying every Analytics event through a PHP script (Apache server) or ASPX script (IIS) for free. My websites are serverless, so server scripts cost a fee per invocation.
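On a serverless site, the equivalent of that PHP or ASPX script is a Worker route. Here is a minimal sketch of the proxy core, assuming some `decodeTarget` function (any of the path schemes in this post) maps the incoming path to a Google URL or returns `null`. `makeProxy` is a hypothetical name, and the forwarded header list is a guess at a reasonable minimum.

```javascript
// Hypothetical proxy core for a Worker route. `decodeTarget` maps the incoming
// same-origin path + query to the real Google URL, or returns null to refuse.
// `upstreamFetch` defaults to the global fetch and is injectable for testing.
function makeProxy(decodeTarget, upstreamFetch = fetch) {
  return async function proxy(request) {
    const incoming = new URL(request.url);
    const target = decodeTarget(incoming.pathname + incoming.search);
    if (!target) return new Response('Not found', { status: 404 });

    // Forward only a few harmless headers; cookies and Host stay behind.
    const headers = new Headers();
    for (const name of ['content-type', 'user-agent', 'accept-language']) {
      const value = request.headers.get(name);
      if (value) headers.set(name, value);
    }
    const hasBody = request.method !== 'GET' && request.method !== 'HEAD';
    const upstream = await upstreamFetch(target, {
      method: request.method,
      headers,
      body: hasBody ? await request.arrayBuffer() : undefined,
    });

    // Most fetch implementations hand back an already-decompressed body, so the
    // upstream compression and length headers no longer describe it.
    const outHeaders = new Headers(upstream.headers);
    outHeaders.delete('content-encoding');
    outHeaders.delete('content-length');
    return new Response(upstream.body, { status: upstream.status, headers: outHeaders });
  };
}
```

In a Worker module this would be wired up roughly as `export default { fetch: makeProxy(myDecoder) }`, with `myDecoder` standing in for your own path decoder.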

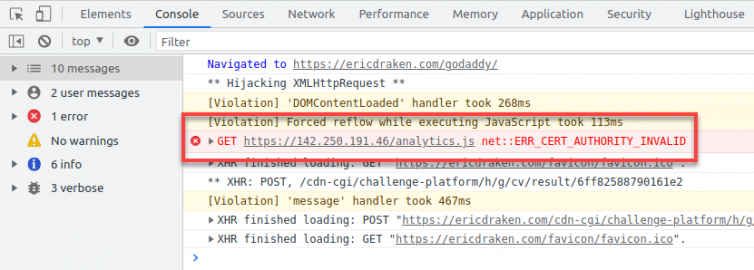

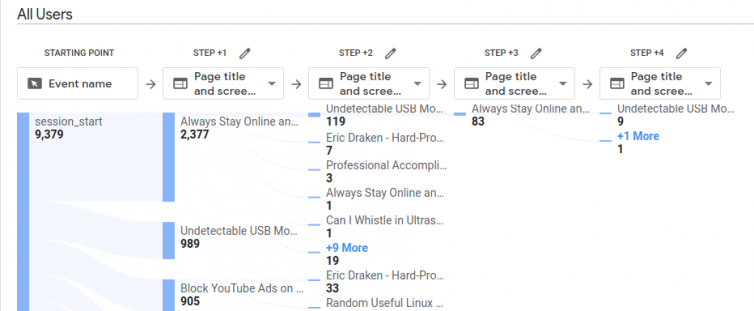

Here is what the results of such proxying look like:

Wonderful.

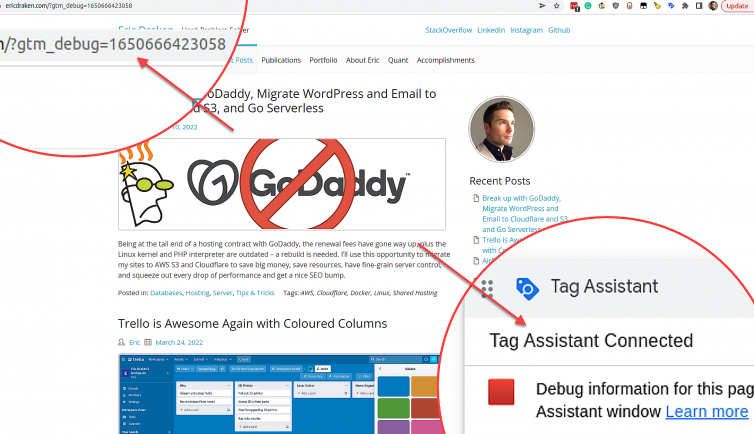

Gotcha: Google Tag Assistant’s Preview Mode

When you preview Tags, you enter into your website with flags and side-channel communications; this can play havoc with our proxy setup:

- For one, GTM needs to add `gtm_debug=` to the page URL and also to the inline GTM script.
- For another, GTM in Preview Mode will override the `dataLayer` window object and also change the web property ID on you (for debugging).
- Still another, Preview Mode may inject `gtag.js` or `gtm.js` multiple times, so we must place a guard around the `XMLHttpRequest` hijacker so it only inserts itself once.
- Yet another, in Preview Mode, we may have to proxy small images and CSS: we cannot guess the content type, so we should be as robust as possible when proxying responses.
- Penultimately, when co-opting gzip- or Brotli-compressed scripts, we must be sure to remove the compression-related headers because the proxied response to the client will be uncompressed. Not paying attention to this point may result in strange characters and side effects that are hard to debug. Trust me.
- Finally, care must be taken not to allow random or bad input, or to let hackers use your Worker as a proxy service for bots. Also, reject URLs with thousands of characters that may overwhelm your Worker quota.
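For that last point, a request guard in front of the proxy might look like this sketch. The origin, length limit, and method list are assumptions to adjust; `rejectReason` is a hypothetical name. Origin and Referer headers are trivial for a bot to forge, so this only filters casual abuse; restricting which upstream hosts can be reached matters too.

```javascript
// Hypothetical request guard for the proxy Worker; all limits are assumptions.
const MAX_URL_LENGTH = 2048;                     // refuse absurdly long URLs early
const ALLOWED_ORIGIN = 'https://ericdraken.com'; // only our own pages should call the proxy
const ALLOWED_METHODS = new Set(['GET', 'HEAD', 'POST']);

// Origin of a URL string, or null if it does not parse.
function originOf(value) {
  try {
    return new URL(value).origin;
  } catch {
    return null;
  }
}

// Returns null when the request looks acceptable, otherwise a short reason.
// Works with a Fetch API Request or any object with url, method, and headers.get().
function rejectReason(request) {
  if (request.url.length > MAX_URL_LENGTH) return 'url too long';
  if (!ALLOWED_METHODS.has(request.method)) return 'method not allowed';
  // Browsers send Origin on POST and cross-origin requests; fall back to Referer otherwise.
  const origin = request.headers.get('origin');
  const referer = request.headers.get('referer');
  const source = origin || (referer ? originOf(referer) : null);
  if (source !== ALLOWED_ORIGIN) return 'foreign origin';
  return null;
}
```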

Gotcha: Web Application Firewall (WAF) Blocks Long Encoded Strings

And you thought this was going to be easy. A WAF will drop requests with long base64-encoded strings because such URLs are telltale signs of an encoded web shell being operated, or of someone trying to egress company information. Then, how do we bypass both the adblockers and the WAF? Isn’t this an entertaining problem? This gotcha and the next one will be overcome shortly.

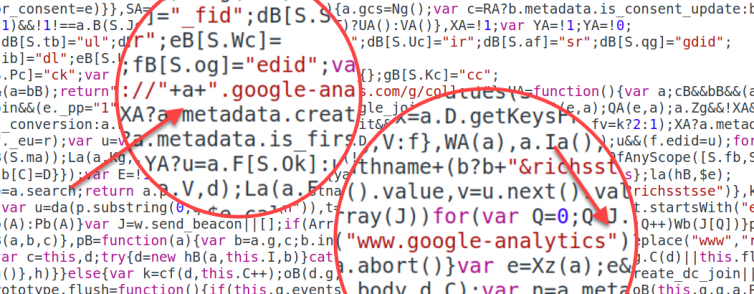

Gotcha: Dynamic URLs and Navigator.sendBeacon()

Consider the following code snippet:

We already rewrite the Google Analytics source to encode inline script tags, iframes, and tracking pixels. We also trap classic XHR requests. We’ll need to expand the XHR interceptor to rewrite some dynamic URLs on the fly (e.g. `"https://" + a + "google-analytics.com"`).

We would also need to hook Navigator.sendBeacon(), as well as XMLHttpRequest, to encode Google URLs and blockwords on the fly.

Or do we?

Should we play whack-a-mole with emerging browser technologies (e.g. sendBeacon), or be future-proof and permanently bypass adblockers?

Future-Proof URL Encoding, or Fantabulous Shenanigans

Let’s use random words instead of base64 strings to bypass the WAF: the Web Application Firewall keys off large base64 strings when deciding whether to drop the connection. Here is what using random words looks like:

If you are zany like me, you can make the random words polymorphic so they do not repeat for a long time; use Cloudflare KV or an S3 bucket of words in “folders” denoted by the day of the month (1~31). Good luck, regex-based adblockers.
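A sketch of the day-of-month idea, assuming the word list has already been loaded (from KV or S3) and that every blockword needs its own distinct word. Instead of picking words independently, which could collide, it deterministically shuffles the list with a PRNG seeded from the day, so the page rewriter and the Worker derive the same codebook without sharing state. `mulberry32` is a well-known small PRNG; `dailyCodebook` is a hypothetical name.

```javascript
// mulberry32: a tiny seeded PRNG; the same seed always yields the same sequence.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical daily codebook: blockword <-> dictionary word for a given day (1-31).
// A seeded Fisher-Yates shuffle guarantees distinct words as long as the list
// has at least as many unique entries as there are blockwords.
function dailyCodebook(blockwords, day, words) {
  const unique = [...new Set(words)];
  if (unique.length < blockwords.length) throw new Error('word list too short');
  const rand = mulberry32(day * 2654435761); // spread small day numbers across the seed space
  for (let i = unique.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [unique[i], unique[j]] = [unique[j], unique[i]];
  }
  const encode = new Map(blockwords.map((bw, i) => [bw, unique[i]]));
  const decode = new Map(blockwords.map((bw, i) => [unique[i], bw]));
  return { encode, decode };
}
```

The day would come from something like `new Date().getUTCDate()`. Because cached pages can outlive midnight, the Worker should probably also accept yesterday's codebook when decoding.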

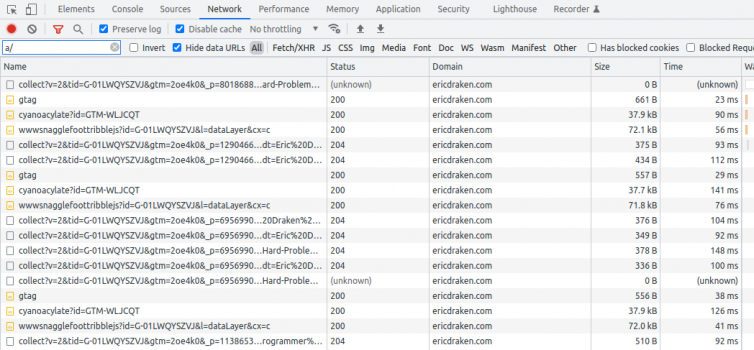

Here are similar, polymorphic URLs captured in DevTools:

Demonstration

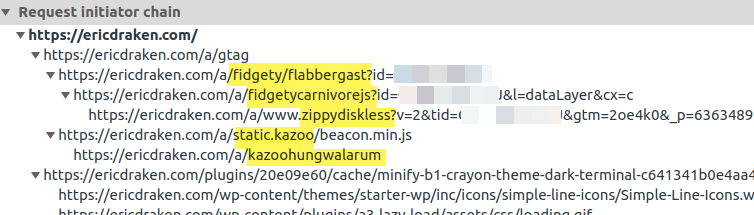

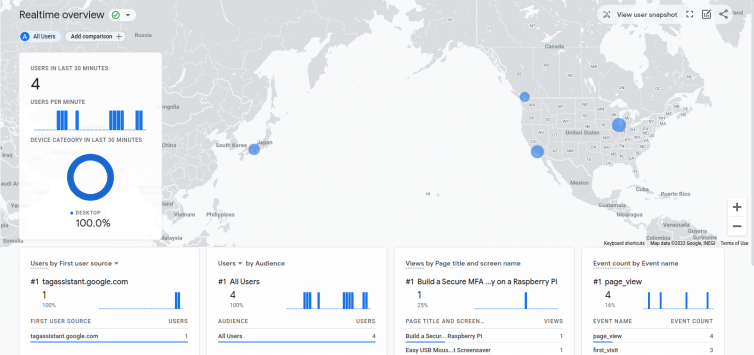

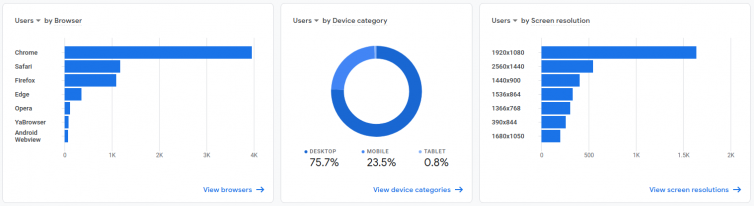

Here are some screenshots demonstrating this technique of preventing adblockers and DNS blockers from blocking Google Analytics.

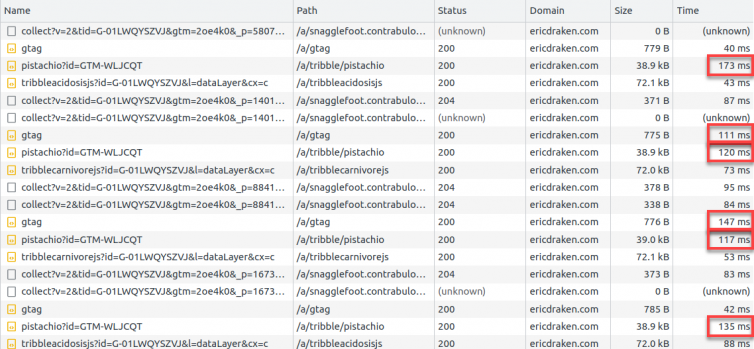

Bonus: Track Visitors Who Use Adblockers

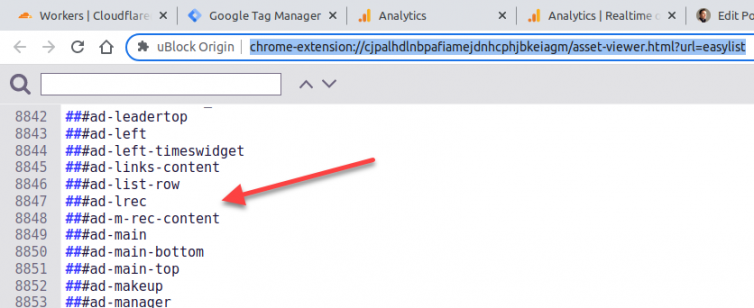

Allow me to demonstrate my technique of un-adblocking and un-DNS-blocking Google Analytics by testing whether the visitor has an adblocker, and seeing whether that person interacts with my site regardless. First, what is a telltale sign of an ad? Let’s check out the blocklist in uBlock Origin:

Then, add some quick JavaScript to send an event when a RegEx adblocker is detected.

```javascript
// AdBlock detection event push for GA4
// Eric Draken
export const adBlockTest = () => {
  document.addEventListener("DOMContentLoaded", function () {
    window.dataLayer = window.dataLayer || [];
    const test = document.createElement('div');
    // A non-breaking space gives the bait element a line box; a plain space collapses to zero height
    test.innerHTML = '&nbsp;';
    // Check chrome-extension://cjpalhdlnbpafiamejdnhcphjbkeiagm/asset-viewer.html?url=easylist
    test.className = 'ad-medium';
    test.id = 'ad-medium';
    document.body.appendChild(test);
    window.setTimeout(() => {
      if (test.offsetHeight === 0) {
        console.log('** AdBlock detected **');
        window.dataLayer.push({'event': 'gtm.adblock'});
      } else {
        console.log('No AdBlock detected');
      }
      test.remove();
    }, 400);
  });
};
```

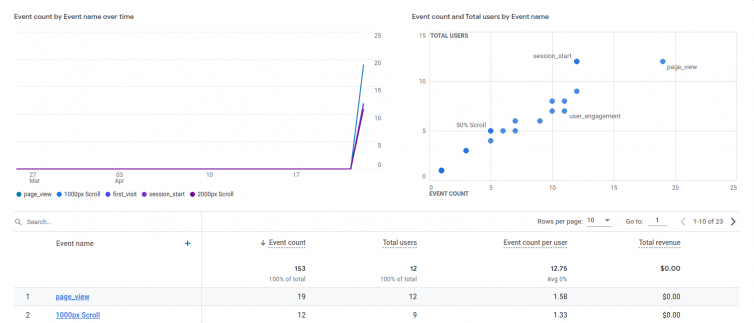

Results and Analytics

The results are exciting:

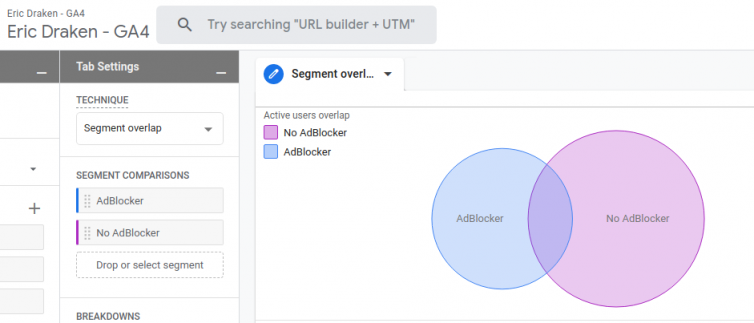

Note: The overlap is due to the adblocker-check script not being on 404 pages, yet.

Optimizations

Consider the following network graph:

You may be happy with 100 ms for loading the gtag.js script on each page view with a 200 OK response instead of a 304 Not Modified, but I’m not. This is an artifact of not having ETags or timestamps in a serverless website using Workers or Lambdas. We can overcome this with some clever use of the Cloudflare KV store, or by caching the Google scripts in S3 (but then we’d have to store S3 credentials in Cloudflare, which I am not prepared to do; total separation of concerns is desired). For now, it’s a splinter in the mind that can be addressed, but it’s good enough for today.
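One way to get 304s back, sketched under assumptions: the Worker already has the rewritten script text in hand (freshly fetched or cached, e.g. in KV), derives a weak ETag from it, and answers `If-None-Match` itself. This saves the transfer, not the Worker's own upstream fetch. FNV-1a here is just a cheap change detector; `weakEtag` and `respondWithEtag` are hypothetical names.

```javascript
// FNV-1a 32-bit hash, used only as a cheap change detector (not for security).
function fnv1a32(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Weak ETag derived from the script body (hash plus length).
function weakEtag(text) {
  return `W/"${fnv1a32(text).toString(16)}-${text.length}"`;
}

// Answer 304 Not Modified when the browser already holds this exact body.
function respondWithEtag(request, body, contentType = 'application/javascript') {
  const etag = weakEtag(body);
  // "no-cache" lets the browser store the script but forces it to revalidate each time.
  const headers = { ETag: etag, 'Cache-Control': 'no-cache' };
  const ifNoneMatch = request.headers.get('if-none-match') || '';
  const presented = ifNoneMatch.split(',').map((s) => s.trim());
  if (presented.includes(etag) || presented.includes('*')) {
    return new Response(null, { status: 304, headers });
  }
  return new Response(body, { status: 200, headers: { ...headers, 'Content-Type': contentType } });
}
```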

Conclusion

Proxying isn’t new, but polymorphic-URL rewrites and recompiled Google Analytics scripts make for a cleverer mouse. That’s basically it: we rewrite obvious regex triggers inside Google scripts with polymorphic tricks to prevent regex-based adblockers from building a list of keywords against us.

Let’s look at this site, for example.

My site is just a fun, niche, nerd-out site, so I bet my visitors are savvy and use adblockers without a second thought – the above Venn diagram is evidence of that.

Update 2022-11-04:

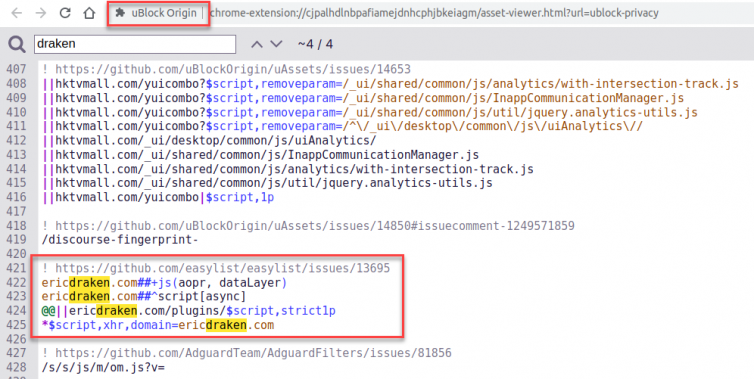

uBlock Origin and EasyList put out some neat filters just for me.

Let’s learn about adblocking and see what is going on here:

```text
! https://github.com/easylist/easylist/issues/13695
! Aborts execution of script (throws ReferenceError) when attempts to read specified property of dataLayer
ericdraken.com##+js(aopr, dataLayer)
! Remove scripts which have the async attribute in the response payload
ericdraken.com##^script[async]
! Don't block my domain scripts from my plugins folder
@@||ericdraken.com/plugins/$script,strict1p
! *$script,domain=example.com: block all network requests to fetch script resources at example.com
*$script,xhr,domain=ericdraken.com
```

- They are trapping dataLayer so it blows up when it is read. Clever!

- They are removing all async scripts – usually analytics scripts use this.

- They made an effort to keep my site working.

- They are blocking all scripts and XHR requests – nuking the site by accident.

We had a chat, and the last rule is gone now. They’re pretty good people.

Notes:

1. Fractions of a penny, with a generous per-day free amount.