I love what I do and the teams I work with. Here are some of my professional accomplishments and projects I’ve championed in reverse-chronological order.

2021

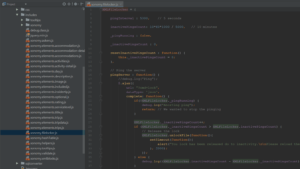

- Built a mirror-traffic test framework to stress-test on real gamer traffic (HAProxy, K8s)

- Migrated a multi-million-dollar system from Py2 to Py3 with extensive testing (Python, K8s)

- Designed a low-level fork-join mechanism to capture and play back live data (Python, C++)

- Implemented GitHub automation to streamline PR reviews (Python, GitHub)

- Refactored a massive if-else chain into structured modules for effective unit testing (Python)

- Completed a major distributed microservice project on time and deployed to AWS (AWS)

- Designed and implemented the impossible: converting between YAML and JSON while preserving comments (YAML, JSON)

2020

- Led a team of five through two major ML fraud-detection projects

- Migrated projects from manual AWS ECS deploys to Terraform scripts (AWS)

- Successfully launched a large system of microservices on AWS (AWS)

- Created a release management system for CI/CD of microservices and assets (GitHub)

- Integrated PCI-compliant controls into all aspects of development (GitHub Hooks)

2019

- Introduced Slack to our team for a massive uptick in productivity (Teambuilding)

- Organized a flash mob to celebrate our team (Teambuilding)

- Planned and implemented the AWS architecture for our new SaaS products (AWS, CF, S3, ECR)

- Transitioned the team from SVN to Git on GitHub Enterprise (Git, Gitflow)

- Introduced static analysis (SonarQube) into our CI/CD pipeline (CI/CD, Static analysis)

- Set up comprehensive unit tests for 3rd-party vendor APIs (PHP, REST APIs, Linux)

- Implemented a company-wide lunch-time stretch program (2 years and counting)

- Completed the luxury train travel site CanadaVacations.com

2018

- Implemented PHP-to-Azure AD Single Sign-On to protect intranet sites (PHP, Azure AD, SAML)

- Migrated web sites to Cloudflare for speed and security (DNS, Cloudflare)

- Built a JS/CSS group-and-minify and parallel download system (PHP, JS, CSS)

- Built a cache management system (PHP, Linux)

- Built a non-WordPress lazy-load system (PHP, GD, JS, HTML)

- Implemented a modular package policy vs. one monolithic repo (PHP, Composer, Git)

- Built an automatic Azure AD signing token rollover updater (PHP, Azure AD)

- Migrated the development process to Docker (Linux, Docker, VMware)

- Implemented a policy of using PHP 7.2 everywhere (PHP)

- Implemented automated continuous integration (CI) with GitHub (Git, Docker, Linux)

- Built an automated image-renamer in WordPress for SEO (WordPress, PHP)

2017

- Built an automated CSV/SQL data processing system (PHP, MySQL, Linux)

- Implemented global error reporting with Slack (PHP, Slack)

- Built and implemented a thumbnail generation API (PHP, PhantomJS, NodeJS)

- Built and implemented a wishlist system (HTML, JS, CSS, PHP, MySQL)

- Built an output buffering manipulation shim (PHP, HTML)

- Implemented an in-house GeoIP system for speed (PHP, Linux, SQLite)

- Designed and implemented a typeahead search engine (PHP, HTML, JS, MySQL)

- Created an XML editor for non-technical users (JS, PHP, Git)

- Implemented GoAccess real-time web log analyzer (Linux, Apache)

- Created a web site visitor behavior tracker (JS, PHP, MySQL)

- Designed and built a smart, automatic PDF generator (PHP, ChromeJS, NodeJS, JS, Linux)

2016

- Discovered and cleaned out server hacks (Linux, PHP)

- Implemented better server security (Linux, PHP)

- Implemented reasonable penetration monitoring (Linux, Git)

- Set up an enterprise server with migration (Linux, MySQL, Apache, PHP)

- Implemented Git teamwork practices (Git)

2019

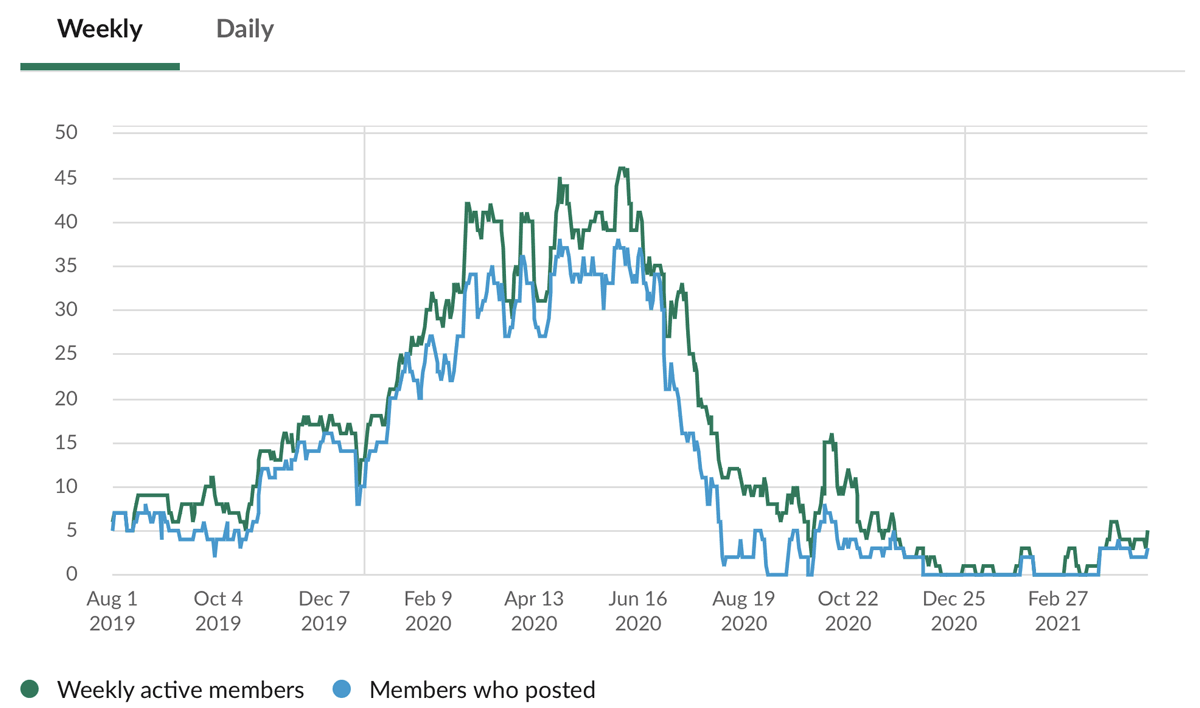

Introduced Slack to our team for a massive uptick in productivity

Slack, Teambuilding

When I started, we had no meaningful IM or communication system and no way to message other business units to collaborate or tap into the knowledge of colleagues on different floors. Also, the only way to build camaraderie and enjoy each other’s company at work was to walk up to someone’s desk.

Wanting to build the team and increase the fun factor at work, I took it upon myself to create and administer a Slack workspace. For about a year, we had the most productivity I’ve ever seen: we sent memes, chatted in short bursts, and, most importantly, could effectively share code snippets and screenshots, receive CI/CD alerts and Git PR notices, and get work done. Eventually, notice came down that we had to switch to Teams (and block Slack), but it was an amazing year with tremendous buy-in from my teammates.

Result: For nearly a year, we had amazing communication, camaraderie, and engagement.

Organized a flash mob to celebrate our team

Teambuilding

Flash mobs were en vogue, so I arranged for another business unit to converge on ours from all angles and cheer and celebrate us, to my team’s complete surprise.

Result: A nice little recognition for how hard the team works.

Introduced static analysis (SonarQube) into our CI/CD pipeline

CI/CD, Static analysis, SonarQube

We had a hiring blitz and took on many new team members with varied levels of coding experience. First as a proof of concept, and then as a mission-critical system, I introduced and championed a SonarQube static analysis server that breaks automatic builds on Quality Gate failure. This means that if source code has more than a few code smells, duplicated code, possible SQL-injection entry points, and the like, the build fails and, thankfully, the CI/CD pipeline stops.

As a side effect, the team tries to meet SonarQube expectations as a point of pride! For example, they will strive for >80% code coverage for that green “A” in the dashboard.
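To make "break the build on Quality Gate failure" concrete, here is a minimal sketch of a CI step, assuming the pipeline can reach SonarQube's Web API endpoint `api/qualitygates/project_status`. The server URL, project key, and token are placeholders, and the function names are hypothetical; this is not necessarily how the pipeline described above was wired.

```python
import base64
import json
import urllib.parse
import urllib.request


def fetch_gate_status(server: str, project_key: str, token: str) -> dict:
    """Ask SonarQube's Web API for a project's Quality Gate status.

    The token is sent as the HTTP basic-auth user name with an empty password.
    """
    query = urllib.parse.urlencode({"projectKey": project_key})
    request = urllib.request.Request(f"{server}/api/qualitygates/project_status?{query}")
    credentials = base64.b64encode(f"{token}:".encode("utf-8")).decode("ascii")
    request.add_header("Authorization", f"Basic {credentials}")
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def gate_passed(payload: dict) -> bool:
    """Only an explicit "OK" passes; ERROR or missing data fails closed."""
    return payload.get("projectStatus", {}).get("status") == "OK"


def exit_code(payload: dict) -> int:
    """0 lets the pipeline continue; 1 breaks the build."""
    return 0 if gate_passed(payload) else 1
```

In a CI job this would end with something like `sys.exit(exit_code(fetch_gate_status(...)))`. Because SonarQube processes analysis reports in the background, the check should run only after that processing has finished; otherwise it may read the previous analysis's status.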

Result: Code quality significantly increased, with drastically fewer code smells

Set up comprehensive unit tests for 3rd-party vendor APIs

PHP, REST APIs, Linux

Connecting to many third-party vendors’ APIs presents challenges in ensuring our code connects correctly. I was able to 1) convince the stakeholders that we needed to spend some cycles on creating robust unit tests, and 2) write several comprehensive, automated unit tests to ensure the API contracts are being fulfilled.

Result: Virtually eliminated the API QA work

Implemented a company-wide lunch-time stretch program

I came back from Japan in 2016 and started doing something at my desk called Radio Taiso (Japanese calisthenics), which big Japanese companies start the day with. Soon people wanted to join in. Then I began to explain the movements, because the instructions are in Japanese. Eventually, “taiso” became a company-wide event broadcast on the company monitors, signaling lunchtime (and stretch time).

Result: Increased feeling of togetherness, less employee fatigue

Completed the luxury train travel site CanadaVacations.com

After nearly a year of project planning, user journey plotting, data architecting, data warehousing, gigabytes of digital asset management, real-time API communication, and reflows and redesigns, I unveiled the completely new, fast, CMS-backed luxury Canadian train travel web site CanadaVacations.com. I was involved in every stage of planning, and I designed and built 100% of the backend for speed and reliability.

Result: Completed a nearly year-long design and build

2018

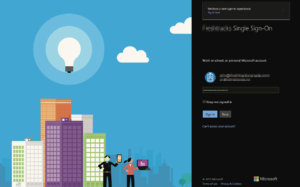

Implemented PHP-to-Azure AD Single Sign-On to protect intranet sites

PHP, Azure AD, SAML

In a nutshell, Apache’s .passwd was protecting some quite substantial systems with a single password shared by up to 60 people. It was keeping me up at night. I am really proud of this accomplishment because I had to prove to myself it could be done[1], develop a proof of concept to show others, and push it as an agenda item to get it implemented. I’m talking about connecting our PHP scripts to our Azure Active Directory for single sign-on to those scripts and pages. My vision: moving from a single shared password to the individual email and password each staff member uses to log in to their PC and Outlook.

I dedicated myself to this special project and again worked overtime and at home. I tested it thoroughly so it didn’t interfere with things like PHP sessions, and I added logging everywhere so I could know the system state in real time. Azure AD is an enterprise system, so community resources like Stack Overflow weren’t helpful. I had to buckle down, go through the Microsoft documentation, and figure everything out myself. In the end, I built a beautiful, encapsulated system that could be used on any PHP page in our intranet. I know this has helped greatly to keep us secure.

Result: Key systems protected by individual credentials

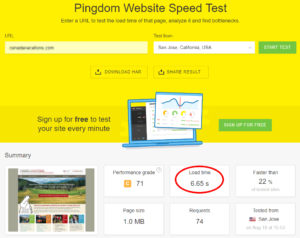

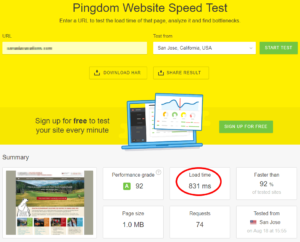

Migrated web sites to Cloudflare for speed and security

DNS, Cloudflare

When I joined my company, one of my very first recommendations was that we use Cloudflare for 1) edge caching, 2) blanket HTTPS, and 3) a WAF (web application firewall). The third reason was the most pressing at the time, as we had just been hacked. I had previous experience with Cloudflare, so I already had my own custom API connectors for things like purging cache and managing security levels.

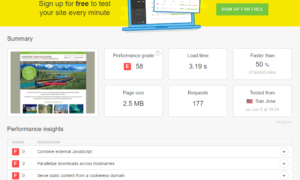

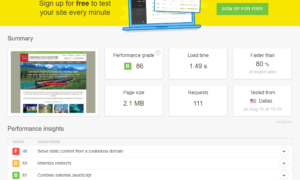

I had to convince a handful of people of the merits of Cloudflare first, even going as far as quietly transferring DNS management for one of our lesser, unused sites to Cloudflare. At one executive meeting in our boardroom, I asked if I could show a quick presentation. I showed Pingdom test[2] results from before and after using Cloudflare. Before, our load time was about 6 s[3]; after, 1 s. That was it. Migrating to Cloudflare became part of the agenda[4]. Now our sites are protected by a modest WAF[5], have DDoS protection, are faster, and use HTTPS everywhere.

Result: Web site speed increased

Built a JS/CSS group-and-minify and parallel download system

PHP, JS, CSS

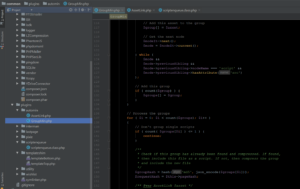

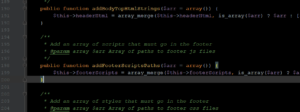

There was now some buzz about making the web sites even faster than with Cloudflare alone. Site speed affects user experience, which affects SERP[6] rankings, which are inversely related to how much we spend on AdWords and PPC. Our primary web site was not scoring very well on Pingdom, earning a failing grade of 58. My task was to squeeze every drop of performance out of the site without changing the site too much. With my tool alone[7], I got the average page load time from 3.3 s down to 1.5 s.

I went back to my output buffer shim and added a DOM parser[8] that groups neighboring JavaScript (and CSS) together, minifies it, and replaces the group with a single link. The combined file is reused on subsequent page loads.
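The grouping step can be sketched as follows. This is an illustration, not the shim itself: it assumes the DOM parser has already produced the page's asset references in document order as `(kind, url)` pairs (a hypothetical representation), and it leaves minification out. Consecutive scripts or stylesheets collapse into one bundle named after a hash of its members, so an unchanged group maps to the same cached file on later page loads.

```python
import hashlib
from itertools import groupby

# Node kinds whose neighbors get merged; anything else breaks a run.
BUNDLED_KINDS = {"script", "style"}


def bundle_url(kind: str, urls: list) -> str:
    """Name a bundle after a hash of its members, so the same group reuses the same cached file."""
    digest = hashlib.sha1("\n".join(urls).encode("utf-8")).hexdigest()[:12]
    extension = "js" if kind == "script" else "css"
    return f"/cache/{digest}.{extension}"  # hypothetical cache location


def group_assets(assets: list) -> list:
    """Collapse runs of neighboring (kind, url) assets of the same bundled kind.

    Returns (kind, url, members) triples in document order; a bundle's members
    are the original URLs it replaces, kept in their original order.
    """
    result = []
    for kind, run in groupby(assets, key=lambda asset: asset[0]):
        urls = [url for _, url in run]
        if kind in BUNDLED_KINDS and len(urls) > 1:
            result.append((kind, bundle_url(kind, urls), urls))
        else:
            result.extend((kind, url, [url]) for url in urls)
    return result
```

Breaking runs at any other node keeps script execution order intact relative to whatever sits between them; the concatenated and minified file would be written once to the hashed path and served from there afterwards.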

Additionally, until HTTP/2 is adopted by all browsers, I can increase parallel downloading of images and assets by spreading them across subdomains. My shim can rewrite the href and src URLs so that successive assets use alternating subdomains. This means that instead of downloading only 6 assets at a time from one hostname, the browser can download 17+[9]. This has dramatically improved performance. Until the code base is rewritten to include JS and CSS dependency management (as WordPress does), this is our most important speed tool.
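A sketch of the URL-rewriting idea follows, with hypothetical shard hostnames and a deliberately simple regex over `src`/`href` attributes. One change from strict alternation is made on purpose: each path is assigned by a stable hash (`zlib.crc32`), so a given asset always maps to the same subdomain and stays cached from page to page, whereas plain round-robin can send the same image to a different host on the next page.

```python
import re
import zlib

# Hypothetical shard hostnames; each needs a DNS record pointing at the same server.
SHARDS = ["static1.example.com", "static2.example.com", "static3.example.com"]

# Root-relative asset URLs only ("/img/a.png", not "//host/..." or "https://...").
ASSET_ATTR = re.compile(
    r'\b(src|href)="(/[^"/][^"]*\.(?:png|jpe?g|gif|svg|css|js))"', re.IGNORECASE
)


def shard_for(path: str) -> str:
    """Pick a shard deterministically so an asset's URL is identical on every page."""
    return SHARDS[zlib.crc32(path.encode("utf-8")) % len(SHARDS)]


def shard_urls(html: str) -> str:
    """Rewrite matching src/href attributes to point at a shard hostname."""
    def rewrite(match):
        attr, path = match.group(1), match.group(2)
        return f'{attr}="https://{shard_for(path)}{path}"'
    return ASSET_ATTR.sub(rewrite, html)
```

Under HTTP/2, which multiplexes many requests over one connection, sharding like this generally stops helping and adds extra connection setup, so it is a stopgap for HTTP/1.1 clients.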

Result: Web site speed greatly increased

Built a cache management system

PHP, Linux

With a bigger system comes more maintenance. After migrating to Cloudflare and building a group-and-minify tool, we now have cache files both on the server and on Cloudflare’s edge servers. To make life easier for us developers, I built a tool that purges the cache in both places and is conveniently accessible through an API, which can be called as part of a Grunt job, for example. Without this tool, one of us would have to log into the server, cd to the cache folders, and rm the cache files, then log into Cloudflare, navigate a couple of pages, and manually purge the cache. This is much better.
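A minimal sketch of the two purge steps, assuming a local cache directory and a Cloudflare API token allowed to purge the zone's cache. The directory, zone ID, token, and function names are placeholders. The Cloudflare part uses the v4 `zones/<zone_id>/purge_cache` endpoint with `purge_everything`; the request is built separately from sending it so it can be inspected.

```python
import json
import pathlib
import urllib.request

CF_API = "https://api.cloudflare.com/client/v4"


def purge_local_cache(cache_dir: str) -> int:
    """Delete every file under cache_dir (directories are kept); return the count removed."""
    removed = 0
    for path in list(pathlib.Path(cache_dir).rglob("*")):
        if path.is_file():
            path.unlink()
            removed += 1
    return removed


def cloudflare_purge_request(zone_id: str, api_token: str) -> urllib.request.Request:
    """Build (but do not send) a request that purges the zone's whole edge cache."""
    body = json.dumps({"purge_everything": True}).encode("utf-8")
    return urllib.request.Request(
        f"{CF_API}/zones/{zone_id}/purge_cache",
        data=body,
        headers={"Authorization": f"Bearer {api_token}", "Content-Type": "application/json"},
        method="POST",
    )


def purge_everywhere(cache_dir: str, zone_id: str, api_token: str):
    """Purge the server cache, then the edge cache; return (files removed, Cloudflare success flag)."""
    removed = purge_local_cache(cache_dir)
    request = cloudflare_purge_request(zone_id, api_token)
    with urllib.request.urlopen(request, timeout=30) as response:
        result = json.load(response)
    return removed, result.get("success", False)
```

Exposing `purge_everywhere` behind a small internal endpoint is what lets a build task such as a Grunt job trigger both purges with one call.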

Result: Repetitive tasks eliminated

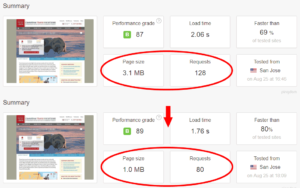

Built a non-WordPress lazy-load system

PHP, GD, JS, HTML

This is a big win because I didn’t have the benefit of WordPress to simply add a lazy-load[10] plugin, and I got it done, tested, approved, and implemented live in a day. Again using my shim, I built a PHP module that parses the DOM and rewrites the attributes of every image that neither has a nolazy class nor is a descendant of an element with a nolazy class. It also creates a compressed, transparent placeholder with exactly the dimensions of the original image. The front-end JavaScript loads the original image when it scrolls into the viewport, and only if the image is not hidden by some ancestor element. This has saved huge amounts of bandwidth, as our sites are image-heavy.
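The exclusion rule above (skip an image that has the `nolazy` class or sits inside an element that has it) can be sketched with Python's standard `html.parser`. This illustrates only the selection logic; the placeholder generation and the front-end loader are out of scope, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they never wrap other elements.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


def has_nolazy(attrs) -> bool:
    """True if the element's class attribute contains the token "nolazy"."""
    for name, value in attrs:
        if name == "class" and value and "nolazy" in value.split():
            return True
    return False


class LazyImageFinder(HTMLParser):
    """Sort <img> src values into lazy-load candidates and excluded images."""

    def __init__(self):
        super().__init__()
        self.open_elements = []  # stack of (tag, carries_nolazy) for currently open elements
        self.candidates = []
        self.excluded = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            inside_nolazy = any(flag for _, flag in self.open_elements)
            target = self.excluded if has_nolazy(attrs) or inside_nolazy else self.candidates
            target.append(dict(attrs).get("src"))
        elif tag not in VOID_ELEMENTS:
            self.open_elements.append((tag, has_nolazy(attrs)))

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return
        # Pop back to the matching open tag; this also tolerates unclosed inner tags.
        for index in range(len(self.open_elements) - 1, -1, -1):
            if self.open_elements[index][0] == tag:
                del self.open_elements[index:]
                break


def find_lazy_images(html: str):
    """Return (candidates, excluded) lists of image src values in document order."""
    finder = LazyImageFinder()
    finder.feed(html)
    finder.close()
    return finder.candidates, finder.excluded
```

Tracking the `nolazy` flag on a stack of open elements is what makes the ancestor check cheap: each image only needs to look at the elements currently open around it.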

Result: Huge bandwidth savings sitewide, faster pages, better SEO

Implemented a modular package policy vs. one monolithic repo

PHP, Composer, Git

Until recently, we had been using one monolithic Git repo per web site. My teammates would step on each other’s toes pulling from and merging into dev, and then periodically into master. I implemented a policy of converting modular features into local Composer packages that have their own Git version control. This way, each package (and thus each module or feature) can be owned, developed, unit tested, improved, and maintained independently from the main web site repo. The team now only has to run composer update on the dev branch on our local machines when we announce updates.

Result: More comfortable development environment

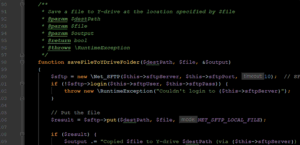

Built an automatic Azure AD signing token rollover updater

PHP, Azure AD

The SSO system would “break” every 30 to 45 days without warning. The X.509 signing keys used by the SAML2 SSO authentication would change without notice, so staff could not log in. I would have to manually download the federation metadata XML, extract the signing keys, and replace the invalid ones on our servers. I got all hands on deck to help me figure out why this was happening and how to predict the next rollover date, but between the five of us, we couldn’t figure it out[11].

My solution was to write a tool that periodically downloads and parses the XML, checks for differences, verifies the signing keys, replaces the keys on the server, and reports to Slack[12]. The system has been running fine ever since. Here is what I eventually found about the problem and why it was hard to deal with:

These rollovers, in which new security certificates are issued by Microsoft, happen approximately every six weeks for those using the Azure AD service to secure access to their applications. – Redmond Magazine (July, 2016)

But then,

[Microsoft] will be increasing the frequency with which we roll over Azure Active Directory’s global signing keys … instead of giving notice to organizations about the coming certificate changes, Microsoft is planning to update these global signing keys without giving notice. – Redmond Magazine (Oct, 2016)
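The core of such an updater, extracting the signing certificates from the federation metadata and diffing them against the ones the server trusts, can be sketched like this. It assumes standard SAML 2.0 metadata, where certificates sit in `KeyDescriptor` elements (with `use="signing"` or no `use` attribute) under the XML Digital Signature `X509Certificate` element. Downloading, certificate verification, writing keys to disk, and the Slack report are left out, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespaces used by SAML 2.0 metadata and XML Digital Signature.
MD = "urn:oasis:names:tc:SAML:2.0:metadata"
DS = "http://www.w3.org/2000/09/xmldsig#"


def signing_certificates(metadata_xml) -> set:
    """Collect base64 certificate bodies from every signing KeyDescriptor.

    A KeyDescriptor with no `use` attribute counts as usable for signing.
    Whitespace inside the base64 text is removed so comparisons are stable.
    """
    root = ET.fromstring(metadata_xml)
    certificates = set()
    for key in root.iter(f"{{{MD}}}KeyDescriptor"):
        if key.get("use", "signing") != "signing":
            continue
        for cert in key.iter(f"{{{DS}}}X509Certificate"):
            certificates.add("".join((cert.text or "").split()))
    return certificates


def diff_certificates(trusted: set, published: set):
    """Return (certificates to install, certificates to retire)."""
    return published - trusted, trusted - published
```

A periodic job would fetch the tenant's metadata, call `signing_certificates`, and act only when `diff_certificates` returns something non-empty; where the new keys must be written depends on the SAML library in use.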

Result: Achieved a complete SSO system without the price tag of a hosted app

Migrated development process to Docker

Linux, Docker, VMware

When I started, the workflow was: develop on a personal MAMP/LAMP stack and hope the FTP’d changes work on the live server. Only older LAMP components[13] were used, so they would match the versions on the then-outdated live server. There was no backup live server, so the live server was never upgraded, for fear that upgraded packages would break it. It was challenging.

I taught myself Docker on evenings and weekends, then lobbied for company buy-in[14]. Now we have development and testing servers as isolated Docker stacks where we can test the latest package versions and the newest and greatest technology.

We now use PHP, Node, Ruby, Sass, ES6, Nginx, Apache, Elasticsearch, MariaDB, Redis, etc., easily and comfortably on the same physical developer computers, whether PC or OS X. This was previously impossible. Best of all, all of the Docker recipes are under version control, so any developer on my team can run git clone and docker-compose up to get a local web site and build tools running in just moments.

Result: Greatly saved company time, money, and resources

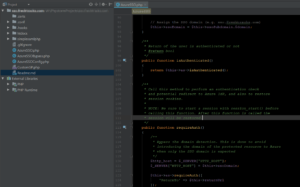

Implemented a policy of using PHP 7.2 everywhere

PHP

Most of our codebase is written for PHP 5.4. Since I started, I’ve implemented a policy of using PHP 7.2 everywhere going forward, including specifying parameter and return type hints, making all member functions private or protected unless they need to be public, and using minimal-sized functions within classes to build up functionality. Additionally, PHP docblocks are now used everywhere to explain what a function or class does. Class inheritance is used to keep code DRY, and interfaces are used to ensure objects conform to a common standard. Enforcing PHP 7.2 standards in a place accustomed to PHP 5.4 has been my hardest accomplishment.

Result: Greater code stability and reliability

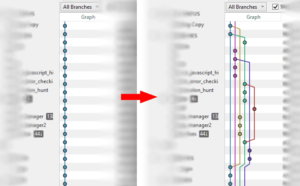

Implemented automated continuous integration (CI) with GitHub

Git, Docker, Linux

The company has grown. We now have several stakeholders, a new QA/UAT tester, content writers, an image curator, and a contract front-end developer, in addition to me; I handle 100% of the backend. I had previously set up a staging server running Docker, but with so many people involved, one server isn’t enough. By combining GitHub webhooks (HTTP requests GitHub sends when commits are pushed) with private SSH keys and Docker stacks on different virtual machines, I set up a per-branch[15] Docker stack that essentially runs git pull and resets its Docker services whenever that branch is pushed to GitHub.

This doesn’t just pull the latest repo changes; my CI system also automatically performs Node and Composer updates, regenerates thumbnails, and resets the DB to a known state.
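The webhook side can be sketched as below, assuming GitHub's standard push payload (its `ref` field, e.g. `refs/heads/dev`) and a shared webhook secret, which GitHub uses to sign each delivery in the `X-Hub-Signature-256` header. The branch-to-directory mapping and the commands per stack are hypothetical, and the sketch returns the commands rather than running them.

```python
import hashlib
import hmac
import json

# Hypothetical mapping from branch name to the directory holding that branch's Docker stack.
STACKS = {
    "dev": "/srv/stacks/dev",
    "staging": "/srv/stacks/staging",
    "master": "/srv/stacks/master",
}


def signature_valid(secret: bytes, body: bytes, header) -> bool:
    """Check X-Hub-Signature-256, which GitHub sets to "sha256=" + hex HMAC-SHA256 of the raw body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, header or "")


def commands_for_push(body: bytes) -> list:
    """Map a push event to the refresh steps for that branch's stack (returned, not run)."""
    ref = json.loads(body).get("ref", "")
    if not ref.startswith("refs/heads/"):
        return []  # tag pushes and other refs are ignored
    branch = ref[len("refs/heads/"):]
    stack = STACKS.get(branch)
    if stack is None:
        return []  # no stack provisioned for this branch
    return [
        ["git", "-C", stack, "pull", "origin", branch],
        ["docker-compose", "-f", f"{stack}/docker-compose.yml", "up", "-d", "--build"],
    ]
```

A small HTTP endpoint would verify the signature against the raw request body before parsing it, reject anything that fails, and then pass each command list to `subprocess.run(cmd, check=True)`; the Node/Composer updates, thumbnail regeneration, and DB reset would be further steps in the same list.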

Result: Greatly saves time and enables productivity across a large team

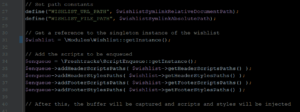

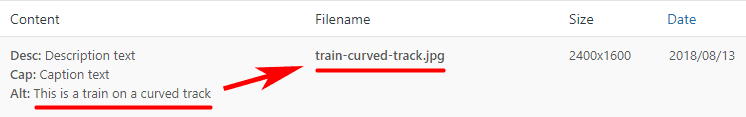

Built an automated image-renamer in WordPress for SEO

WordPress, PHP

It was decided that all our site images needed descriptive file names for SEO purposes. Previously, they were named like DCIM2345.jpg or img034lg.jpg. Management approved a month of work to manually rename and re-upload all the site images. Instead, I proposed and built a WordPress plugin to automatically rewrite all image file names based on the alt text or description in the image metadata. I reused my search engine code to remove stop words (a, an, the, etc.) to make pleasing file names, and combined this with an Nginx rewrite rule. This all took less than a couple of hours.

```nginx
# Nginx
# Rewrite SEO-encoded image URLs like
#   /files/d/DCIM0001/train-curved-track.jpg
# back to
#   /files/DCIM0001.jpg
# (rewrite matches the URI without its query string, so the optional ?... group is never needed)
rewrite ^(/files/)[a-zA-Z]/([^/]+)/.+?\.(jpe?g|png|gif)(?:\?.*)?$ $1$2.$3;
```
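The file-name step can be sketched as a small slug function. The stop-word list here is a short hypothetical sample, not the list from the search-engine code, and the single-letter path segment is assumed to be the lowercased first letter of the original file name, which matches the example in the Nginx rule but is a guess.

```python
import re
from pathlib import PurePosixPath

# Hypothetical sample stop-word list; the real search-engine list is not shown.
STOP_WORDS = {"a", "an", "the", "on", "of", "in", "at", "and", "with", "for", "to"}


def seo_slug(text: str) -> str:
    """Lowercase, keep alphanumeric words, drop stop words, join with hyphens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(word for word in words if word not in STOP_WORDS)


def seo_url(original_path: str, alt_text: str) -> str:
    """Build /files/<letter>/<stem>/<slug><ext> from /files/<stem><ext>.

    ASSUMPTION: <letter> is the lowercased first character of the original
    file name; the real scheme is not stated.
    """
    path = PurePosixPath(original_path)
    slug = seo_slug(alt_text) or path.stem.lower()  # fall back if only stop words remain
    return f"{path.parent}/{path.stem[0].lower()}/{path.stem}/{slug}{path.suffix}"
```

Because the original file name survives inside the SEO URL, the rewrite rule can map every descriptive URL back to the untouched file on disk, so nothing has to be physically renamed or re-uploaded.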

Result: Reduced a man-month of work to just two hours

2017

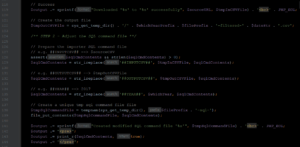

Built an automated CSV/SQL data processing system

PHP, MySQL, Linux

The poor fellow next to me, I found out, had to manually download and import ~100 MB of CSV data daily, run dozens of slow MS Access queries by hand, then export the results back into several smaller CSV files and save them in different folders for the staff to use. Each morning he would spend an hour on this task, mostly waiting for MS Access. I thought to myself, “There’s no way we can’t automate this.”

On my own initiative, I set out to automate this, first by building modules that handle specific tasks like cURLing the CSV file and SFTPing to my dev server and our company shared folders (via a dedicated local SFTP server). I then had to create local accounts and set up firewall rules in a few places to accomplish my goal. I also had to create a dedicated MySQL environment on a dev server that could run at 100% CPU for the demanding operations[16].

Other modules handle logging for debugging and sanity/error checking. Yet another module parses the SQL script into chunks, then runs and checks the chunks in sequence. I designed the system so the other team can edit the SQL script directly without needing me. Everything was tested and documented. A couple of cron jobs were set up, and the whole system has been running continuously without trouble. It’s now considered a “mission-critical” system and has since expanded to process and post-process more business data.
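The chunking module can be sketched as a splitter that cuts the script at semicolons outside string literals and `--` comments, then runs the pieces in order and stops at the first failure, naming the chunk that broke. This is a minimal sketch: Python's built-in `sqlite3` stands in for the MySQL server, any `--` outside a literal is treated as a comment, and `/* ... */` comments and MySQL `DELIMITER` blocks are not handled.

```python
import sqlite3


def split_sql(script: str) -> list:
    """Split a script at semicolons that are outside quotes and -- comments."""
    statements, buffer = [], []
    quote = None  # the quote character of the literal we are inside, if any
    i, n = 0, len(script)
    while i < n:
        ch = script[i]
        if quote:
            buffer.append(ch)
            if ch == "\\" and i + 1 < n:  # MySQL-style backslash escape: keep next char verbatim
                buffer.append(script[i + 1])
                i += 2
                continue
            if ch == quote:
                if i + 1 < n and script[i + 1] == quote:  # doubled quote, e.g. 'it''s'
                    buffer.append(script[i + 1])
                    i += 1
                else:
                    quote = None
        elif ch in "'\"`":
            quote = ch
            buffer.append(ch)
        elif script.startswith("--", i):
            newline = script.find("\n", i)
            i = n if newline == -1 else newline  # skip the comment, keep the newline
            continue
        elif ch == ";":
            statement = "".join(buffer).strip()
            if statement:
                statements.append(statement)
            buffer = []
        else:
            buffer.append(ch)
        i += 1
    tail = "".join(buffer).strip()
    if tail:
        statements.append(tail)
    return statements


def run_chunks(conn: sqlite3.Connection, statements: list) -> int:
    """Run statements in order; on the first failure roll back and report which chunk it was."""
    for number, statement in enumerate(statements, start=1):
        try:
            conn.execute(statement)
        except sqlite3.Error as exc:
            conn.rollback()
            raise RuntimeError(f"chunk {number} failed: {exc}") from exc
    conn.commit()
    return len(statements)
```

Splitting rather than sending the whole script at once is what makes per-chunk checking and precise error messages possible, which is also what lets non-developers edit the script safely.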

Result: At least 20 man-hours a month saved

Built and implemented a thumbnail generation API

PHP, PhantomJS, NodeJS

On a regular basis, another team member had to take screenshots of some pages, then edit and crop them into thumbnails in Photoshop. About 60 of these thumbnails regularly needed to be recreated. They are needed for emailing clients, for including in PDFs, and for displaying search results. Again, I thought to myself, “There’s no way we can’t automate this.” On my own initiative, I set out to automate it. No one had heard of PhantomJS[17], but if you have heard of it, then I can happily stop explaining here. It’s essentially a headless WebKit browser that can be programmatically controlled, say, to make thumbnails using clever CSS manipulation and save them to disk in a batch. More man-hours saved.

Result: Many more man-hours a month saved

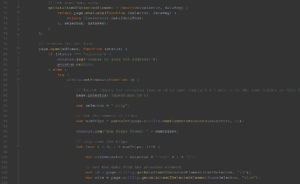

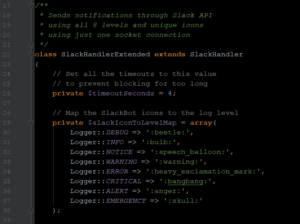

Implemented global error reporting with Slack

PHP, Slack

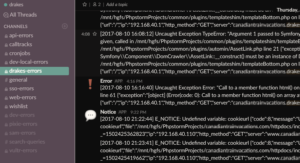

Another initiative I undertook was instant, global error reporting. My team wouldn’t know about errors unless someone noticed a “500 server error” response in the Apache logs, or a web page went blank. There is over a decade of PHP code in production[18], so I knew silent errors were taking place. My company uses Slack, and since we use it daily for messaging, I thought to myself, “Could I send error messages to Slack?”

First I figured out how to make a Slackbot, then I installed a PSR-3 logger called Monolog using Composer. Luckily, there is an existing log handler that logs to Slack; otherwise, I was going to code one. The final step was to register my custom[19] logger in a global error handler. This handler is always available on every PHP page request via php_value auto_prepend_file "..." in a vhost file. Now my team knows about every error, every warning, and every deprecation instantly. This has led to massive improvements in our code base. Since then, it has expanded into a full monitoring system for our APIs and cron jobs. It has become another mission-critical system.
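The same pattern (a logging handler that forwards records to Slack, installed as a global hook) can be sketched with Python's standard `logging` module. This is an analog of the Monolog setup, not a port of it: it assumes a Slack incoming-webhook URL, which accepts a JSON body with a `text` field, and the `send` parameter is a hypothetical seam so the transport can be swapped out in tests.

```python
import json
import logging
import sys
import urllib.request


class SlackHandler(logging.Handler):
    """Forward log records at or above `level` to a Slack incoming webhook."""

    def __init__(self, webhook_url, level=logging.WARNING, send=None):
        super().__init__(level)
        self.webhook_url = webhook_url
        self.send = send or self._post
        self.setFormatter(logging.Formatter("%(levelname)s %(name)s: %(message)s"))

    def _post(self, payload):
        request = urllib.request.Request(
            self.webhook_url,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=10):
            pass

    def emit(self, record):
        try:
            self.send({"text": self.format(record)})
        except Exception:
            self.handleError(record)  # never let error reporting crash the caller


def install_global_hook(logger):
    """Route uncaught exceptions to `logger`, similar in spirit to a prepended PHP error handler."""
    def hook(exc_type, exc, tb):
        logger.critical("Uncaught exception", exc_info=(exc_type, exc, tb))
    sys.excepthook = hook
```

The handler's own level decides what reaches Slack, so routine info logs stay local while warnings, errors, and uncaught exceptions show up in the channel.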

Result: Real-time awareness of system state and issues

Built and implemented a wishlist system

HTML, JS, CSS, PHP, MySQL

My first official project[20] was to create a wishlist system from a whitepaper. It was a project that had been in the works for several months but had stalled indefinitely. It works much like the little hearts Airbnb uses to “like” or save a listing for later viewing. I started over from scratch, as it is a very complex system: it handles saving lists, emailing lists, sharing them, and reminding visitors they have a wishlist, plus internal reporting. I prepared flowcharts, laid out a schedule, and followed my schedule. I’m proud to say I had it production-ready in just under three weeks.

Result: First official project completed in record time

Built an output buffering manipulation shim

PHP, HTML

Before we started using Git for feature branching, I needed a way to develop, test, and integrate the wishlist system above into our existing sites. To recap the state of affairs, we weren’t using Git branches for teamwork yet, and the main site’s development code was constantly in flux with A/B testing. So I built a tool, or rather a shim[21], that silently buffers the PHP output; just before it is sent to the browser, that output passes through my shim. The shim modifies the output to inject my wishlist code and dependencies cleanly into the head, body, and footer of the HTML. My PHP scripts are also processed here. This resulted in a complete decoupling of the existing code and the new wishlist code. The shim has remained and has since evolved to perform more and more post-processing and A/B testing.
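In PHP, this kind of shim is typically built on `ob_start()` with a callback that receives the buffered page and returns the modified version. The injection step itself is a plain string transform, sketched here in Python; the function name and snippets are hypothetical, and only the head and the end of the body are covered.

```python
import re


def inject_assets(html: str, head_html: str = "", body_end_html: str = "") -> str:
    """Insert snippets just before </head> and just before the last </body>.

    If a page lacks one of those tags, that part is left unchanged.
    """
    if head_html:
        # A function replacement avoids treating backslashes in the snippet as escapes.
        html = re.sub(r"(?i)</head\s*>", lambda m: head_html + m.group(0), html, count=1)
    if body_end_html:
        position = html.lower().rfind("</body")
        if position != -1:
            html = html[:position] + body_end_html + html[position:]
    return html
```

Because the existing templates never reference the new feature, removing the injection removes the feature, which is the decoupling the shim was built for.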

Result: New features can be rapidly prototyped

Implemented an in-house GeoIP system for speed

PHP, Linux, Sqlite

One day, I noticed ‘XX’ entries instead of country codes (e.g. ‘CA’, ‘US’) in our visitor tracking system. I was told it was because the free third-party GeoIP API we were calling on every page request was having problems and/or timing out. This slowed down our web site, because PHP cURL requests are blocking function calls[22]: our web pages had added lag proportional to the round-trip time of calling the GeoIP API and getting a response back. When the GeoIP provider had server troubles, we had server troubles. It wasn’t a priority, but on my own I implemented the public version of MaxMind’s Country DB in-house. That means we have a local copy of the GeoIP DB that many free providers also use. I quickly set up a decent API on our end that our own code can query, plus a cron job that checks for DB updates and installs them. Later, when we moved our DNS to Cloudflare, I also began using the HTTP_CF_IPCOUNTRY header provided on every Cloudflare request as a backup/confirmation. We’ve had no lag issues related to GeoIP since.
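The lookup order described above (local database first, Cloudflare's country header as a backup) can be sketched as follows. `HTTP_CF_IPCOUNTRY` is how PHP exposes Cloudflare's `CF-IPCountry` request header. A tiny in-memory range table stands in for the MaxMind database here, using documentation-only IP ranges with made-up country assignments; the function names are hypothetical.

```python
import bisect
import ipaddress

# Stand-in for a local GeoIP database: documentation-only IPv4 ranges with
# made-up country codes. A real deployment would query the MaxMind file instead.
_RANGES = sorted(
    (ipaddress.ip_network(cidr), country)
    for cidr, country in [
        ("192.0.2.0/24", "CA"),
        ("198.51.100.0/24", "US"),
        ("203.0.113.0/24", "JP"),
    ]
)
_STARTS = [int(network.network_address) for network, _ in _RANGES]


def local_lookup(ip: str):
    """Return the country for an address from the local table, or None (no network call)."""
    try:
        address = ipaddress.ip_address(ip)
    except ValueError:
        return None
    index = bisect.bisect_right(_STARTS, int(address)) - 1
    if index >= 0 and address in _RANGES[index][0]:
        return _RANGES[index][1]
    return None


def country_for(ip: str, cf_ipcountry=None) -> str:
    """Local database first; Cloudflare's CF-IPCountry value as a backup; "XX" if unknown."""
    country = local_lookup(ip)
    if country:
        return country
    if cf_ipcountry and cf_ipcountry.upper() != "XX":
        return cf_ipcountry.upper()
    return "XX"
```

Both sources are local to the request (a file lookup and a header that is already present), so neither can add the network round trip that the third-party API did.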

Result: Site speed improved as 3rd-party API use was eliminated

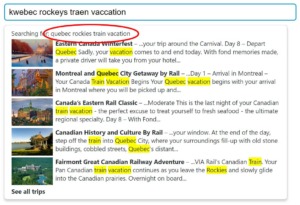

Designed and implemented a typeahead search engine

PHP, HTML, JS, MySQL

Just after New Year’s in 2017, a coworker lamented that we didn’t have a search bar on any of our web sites. I thought, “That can’t be right, can it?” Just about every web site has a search bar or form, and one is standard in every WordPress installation too. Sure enough, we had zero semantic[23] search functionality on any of our sites. I found out that no one knew how to begin building that feature, since our sites are not on a framework like WordPress or Joomla, so it wasn’t on the development road map.

At this time, the office was moving from an old space to a bigger, shinier one, so desks, people, cables, and PCs were being moved around. No intense work was scheduled for a couple of weeks, so I took this opportunity to research search engine projects on GitHub (e.g. TNTSearch, Twitter Typeahead), and not only to build and implement one but also to make it more user-friendly. The engine I built can recognize spelling and phonetic variations. For example, if you search for “Bamf”, it will return results for “Banff”. Likewise, “Kwebec” will find “Quebec”. Marketing now uses this tool to see what people are searching for to plan new products.
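A minimal sketch of the suggestion logic: exact prefix matches first, then a fuzzy fallback for misspellings. One caveat stated plainly: this uses plain string similarity from Python's `difflib`, not a phonetic algorithm, so it is a simpler stand-in for the engine described above. It happens to catch both example queries; a phonetic key such as Metaphone could be layered on top. The term list and function name are hypothetical.

```python
import difflib

# Hypothetical index terms; a real engine would index product and destination text.
TERMS = ["banff", "jasper", "quebec", "vancouver", "whistler"]


def suggest(query: str, terms=TERMS, limit: int = 5) -> list:
    """Prefix matches first; if none, fall back to the closest spellings."""
    q = query.strip().lower()
    if not q:
        return []
    prefix_hits = [term for term in terms if term.startswith(q)]
    if prefix_hits:
        return prefix_hits[:limit]
    # difflib scores by character overlap (SequenceMatcher ratio); 0.6 is its default cutoff.
    return difflib.get_close_matches(q, terms, n=limit, cutoff=0.6)
```

Trying prefixes first keeps typeahead fast and predictable while the user is still typing; the fuzzy pass only runs when nothing starts with the query.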

Result: Visitors can search our sites conveniently

Created an XML editor for non-technical users

JS, PHP, Git

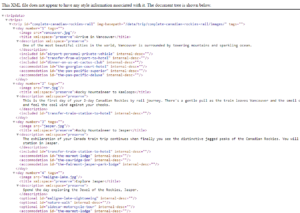

We had a big challenge. Each product’s details were stored as a monolithic text block in a single text area in a very outdated MS Windows file manager. It was impossible to display our products on the web site in any way but as paragraphs with very limited styling. From the top of the company, we were told that we would use a given template to nicely display the product information, that it had to include images and be populated dynamically, that we would still have only that single text area to work with, and that we would have just one week to do it.

I decided the only way to make this work was to store the details of our products in XML along with image paths, and store that XML data in the text area in the outdated file manager. Then, on the PHP side, an XML processor could pull out the nodes and populate the template we were given. I could edit and maintain an XML file, and so could my team, but the catch was that non-technical people needed to add product details too[24]. Within a week, I built a user-friendly XML editor tailored to the task, with a locking mechanism to prevent multiple people from editing simultaneously. It had validation and helpful error messages. I also built in version control backed by Git to automatically keep every change for auditing.

Result: Solved a stubborn data representation problem

Implemented GoAccess real-time web log analyzer

Linux, Apache

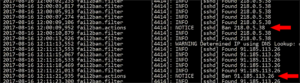

I personally wanted to reduce server bandwidth to make our web sites faster and to reduce server costs. The problem was, we had no idea where our bandwidth was going, just that each day about 12 GB of traffic was flowing to and from our production server. Until then, we had been producing hundreds of megabytes of log files each week, and no one was looking at them. I was also interested in the latest hacking efforts against our sites, but a human just can’t pore over logs that large. Since the server is my baby, I found a way to analyze the logs in real time by installing a daemon called GoAccess. I detail installing the GoAccess real-time web log analyzer for CentOS here.

The use of this tool has changed how we look at the server. We now know instantly which requests return 404s, whether there are any 500 responses, which redirect chains we should fix, what kinds of bots visit us, how often Googlebot comes, and, most importantly, how much bandwidth our assets are using, in great detail. For example, we found an uncompressed image on every page that was costing us 2 GB a day[25].

Result: Server bandwidth usage is fully understood

Built a web site visitor behavior tracker

JS, PHP, MySQL

We have Google Analytics (UA) and Hotjar for visitor analysis, but what we’d really like to do is follow a visitor as he/she moves through the site(s) and trigger helpful messages and events after some positive behavior has been observed. For example, if a visitor has an affinity for a certain kind of product page, then we can display a promotion to him/her on subsequent visits to the site.

The system I built tracks time on page, clicks/taps, movement through the site, number of visits, search queries, and many more touchpoints. It all feeds into a dedicated database that processes the data for each visitor in real time, and the results are fed back into the site’s “feedback” system, which decides whether to display promotions, pop-ups, a chat box, and so on.

Result: Better understanding of visitors, increased sales

Designed and built a smart, automatic PDF generator

PHP, ChromeJS, NodeJS, JS, Linux

I noticed that our PDFs for customers didn’t look so great. Sometimes tables were cut off, and/or pictures were pushed to the next page leaving a headline floating alone on the previous page. I found out this problem had existed for years. My company had tried several solutions from manually using PageMaker, InDesign and Lucidpress, to using HTML with a paid commercial PDF generation tool. The problem is related to that monolithic text storage I talked about earlier, and how we cannot style it. Until my solution, the company accepted the PDFs as they were.

I thought to myself, “There has to be a way to make these PDFs prettier, automatically.” Truth be told, this was not an official project[26], so I worked on this problem after work and at home, all on my own time; I knew I could solve this. I attempted two JavaScript-based positioning algorithms, both of which failed, before using machine vision to finally solve the problem. I set up Linux scripts, headless Chrome, NodeJS, JavaScript, and PHP to make a tool, fronted by an API, that generates hundreds of very pretty PDFs on demand. These are now the PDFs we send to clients.

Result: PDFs look more professional, more recurring man-hours saved

2016

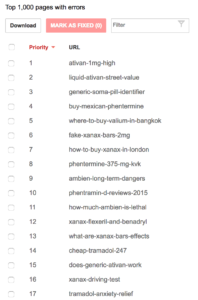

Discovered and cleaned out server hacks

Linux, PHP

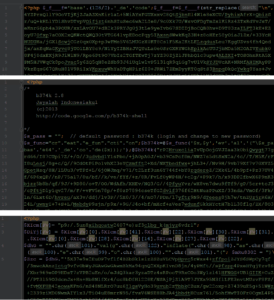

My first task was to find out how our non-WordPress sites were hit with a pharma hack[27], and how it went undetected for at least 3 months. The sites were still infected when I joined. I have more than casual knowledge of attack vectors, so I could grep PHP files for the usual suspects: eval, base64, assert, exec, and better-obfuscated variants. I found four unique web shell scripts of differing complexity peppered throughout the sites, 47 shell scripts in total, leading me to believe that we were hacked by four different actors. The PHP codebase used the deprecated mysql_* functions (mysqli or PDO with prepared statements is strongly recommended), but fortunately none of the pages derive content from the database. I expand on this hack here.

Result: Hacked files removed, SEO efforts saved, visitor trust restored

Implemented better server security

Linux, PHP

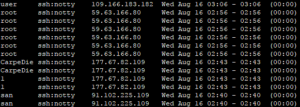

My next order of business was to lock down port 22, which was how root access had been obtained[28]. From the logs and lastb[29] I could see that port 22 was being hit up to 60,000 times a minute from all over the world, and the root password was not complex at all (until I changed it).
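To get the same picture from the logs, a short Python sketch can tally failed SSH password attempts per source IP. It assumes OpenSSH’s usual “Failed password for … from IP port N” message format as written to /var/log/secure on CentOS (Debian-family systems use /var/log/auth.log instead); failed_logins_by_ip() is a hypothetical helper.

```python
import re
from collections import Counter
from typing import Iterable

# Matches OpenSSH's usual failure line, with or without "invalid user", e.g.
# "Failed password for invalid user admin from 203.0.113.9 port 40000 ssh2"
FAILED = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")


def failed_logins_by_ip(lines: Iterable[str]) -> Counter:
    """Count failed password attempts per source IP address."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILED.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts
```

Passing an open file handle for the log (reading it usually requires root) and calling most_common(10) on the result lists the noisiest sources.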

Day to day, we don’t need root access, so I denied SSH login to the root account, period. I then installed and set up Fail2Ban to drop that deluge of traffic[30]. Next, I set up low-access, certificate-based accounts for anyone who needs server access, including myself, for tasks such as performing a git pull. To become root, we have to su up. These efforts drastically reduced break-in attempts. On the PHP side, I disabled the most harmful functions in php.ini, and I filter $_REQUEST on every page request with a prepended script, set via php_value auto_prepend_file "..." in a vhost file.
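Fail2Ban itself works by watching log files and adding firewall rules for offending IPs. The decision at its core can be modeled in a few lines of Python: ban an IP after maxretry failures within findtime seconds, for bantime seconds (those three names are real Fail2Ban jail settings). This is a toy model for illustration, not Fail2Ban’s code; the BanTracker class and the example values are assumptions.

```python
from collections import defaultdict, deque


class BanTracker:
    """Toy model of a Fail2Ban-style rule: ban an IP after `maxretry` failures
    within `findtime` seconds, for `bantime` seconds. Not Fail2Ban's code."""

    def __init__(self, maxretry: int = 5, findtime: float = 600, bantime: float = 600):
        self.maxretry = maxretry
        self.findtime = findtime
        self.bantime = bantime
        self.failures: defaultdict[str, deque] = defaultdict(deque)
        self.banned_until: dict[str, float] = {}

    def is_banned(self, ip: str, now: float) -> bool:
        return self.banned_until.get(ip, float("-inf")) > now

    def record_failure(self, ip: str, now: float) -> bool:
        """Record one failed attempt at time `now`; return True if it triggers a ban."""
        window = self.failures[ip]
        window.append(now)
        # Forget failures that fell outside the findtime window.
        while window and now - window[0] > self.findtime:
            window.popleft()
        if len(window) >= self.maxretry:
            self.banned_until[ip] = now + self.bantime
            window.clear()
            return True
        return False
```

The sliding window is what separates a brute-force burst from an occasional mistyped password: failures spread further apart than findtime never add up to a ban.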

Result: Security greatly improved

Implemented reasonable penetration monitoring

Linux, Git

I initially set up inotify[31] to monitor file changes in key folders on the server, but a watch has to be set up for every folder to be monitored. A better solution was to lock down permissions properly throughout the server, disable execute permissions in temp and data folders, and only add and update files via git pull or git checkout. The elegance of this approach is that whenever anyone on my team performs a git pull, locally changed files that the pull would overwrite surface when git asks us to “commit or stash” the changes first. This also discourages hot-patching web files via FTP outside of version control. In addition, a cron job runs git status in a few places and expects a clean working tree; any new or modified files that would need to be committed are treated as suspicious and reported.
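The cron check can be sketched in Python as below. It asks git for the machine-readable “git status --porcelain” output rather than matching a human-readable message, so an empty result means a clean working tree and anything else (modified tracked files, or “??” for untracked ones) gets flagged. parse_porcelain() and check_repo() are hypothetical helper names, and how findings are reported is left out.

```python
import subprocess


def parse_porcelain(output: str) -> list[tuple[str, str]]:
    """Turn `git status --porcelain` output into (status code, path) pairs.
    An empty list means the working tree is clean."""
    entries = []
    for line in output.splitlines():
        if line.strip():
            # The first two columns are the XY status code, then a space, then the path.
            entries.append((line[:2], line[3:]))
    return entries


def check_repo(repo_dir: str) -> list[tuple[str, str]]:
    """Run git in repo_dir and return any modified or untracked files."""
    result = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo_dir,
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_porcelain(result.stdout)
```

A crontab entry could call check_repo() for each web root and raise an alert whenever the returned list is non-empty, since files deployed through git pull or git checkout never show up there.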

Result: Increased defense against unauthorized file system changes

Set up a new enterprise server and migrated to it

Linux, MySQL, Apache, PHP

The production server had been set up years earlier, ran an older kernel and PHP 5.4, and was littered with abandoned files and folders. I recommended we start over, so we did. I planned and performed a migration from the old server to a new CentOS server with all the latest updates. I was entrusted with the IP and root access to a new blade server (a very nice server) that had only an out-of-the-box CentOS install, and I set it up from scratch. I had time to do a thorough job: hardening security, issuing certificates, setting up git repos[32], migrating the database, separating out web site logs, configuring log rotation, setting up the firewall, scheduling backups, and so on. I treat this production server as my baby[33].

Result: Web site speed improved, security further increased

Implemented git teamwork practices

Git

I introduced my team to more of git’s useful features and implemented a git teamwork workflow. My team now uses git branches to test features and to create web site patches and updates. We use git log to view the change history of a given file, and git blame to see who last changed each line. We can test features on development machines and in virtual environments, merge features from A/B tests or delete them, roll back to previous commits, squash commits, and git pull and git merge into master with ease. This has greatly sped up development.

Result: Team efficiency and speed increased

More wins coming…

Notes:

- Azure AD is challenging to work with, both in how its UI is laid out and in how to perform the SAML token exchange smoothly. ↩

- Pingdom Website Speed Test – https://tools.pingdom.com/ ↩

- Again, the code base is over a decade of code that has passed through many hands. ↩

- Cloudflare is not a magic bullet for web site speed; it does help a lot though. ↩

- Web application firewall: it can prevent things like large blocks of base64 code from being uploaded. ↩

- Search engine ranking ↩

- Later, as a team, we worked on refactoring JavaScript and only loading CSS and JS on demand when needed, not on every page. ↩

- One golden rule of HTML parsing is to never, ever use regex. Always use a DOM/XML parser to be safe. ↩

- See http://www.browserscope.org/?category=network&v=top for connection limits of various browsers. ↩

- This is where only the visible images are loaded. Hidden images below the “fold” have deferred loading until they come into view. This speeds up the initial page load and is good for visitors and SEO. ↩

- The Azure AD portal is very difficult to navigate, and we were unable to find any semblance of rollover dates listed. ↩

- Apparently hosted AAD apps, apps that use Application Proxy, and AAD B2C apps have automatic updates, probably with a substantial price tag attached. ↩

- PHP 5.4 and Apache 2.2 well into 2017! ↩

- The pushback was strong because no one had ever heard of Docker. ↩

- Branches: staging, development, hotfixes, edge ↩

- There were hundreds of SQL statements creating temporary tables at each stage. A different department kept the process this way for ease of updating the SQL. ↩

- Later I switched to headless Chrome, but at this time PhantomJS was the standard in headless browser automation. ↩

- Again, I had just started with this company, but the code base had built up over the years and has passed through several hands and developers. ↩

- I customized it to use different icons in Slack for the different log levels, like notice, warning, critical, etc., and I also implemented batching and threshold logging to avoid getting endless “deprecated” notices sent to Slack. ↩

- The above projects, server migration, security, automation, etc. are challenges I took up on my own. ↩

- A shim is a helper tool until official support is adopted, and I’m referring to using git for teamwork which we eventually did. ↩

- Blocking calls, unlike asynchronous function calls, have to wait for the request to finish before processing the rest of the code or serving the web page. ↩

- We had no search bar or any way for visitors to type what they wanted to search for. ↩

- This idea is terrifying to me because it is so easy for a non-technical person to forget a closing slash, mismatch quotes, mistype an attribute, or damage an XML file in countless other ways. ↩

- The actual website code base belongs to other team members. I’m only a few months into this company at this point, so I don’t interact with this codebase much. What I did notice, however, is that we weren’t caching anything at this time. That has since changed. ↩

- I was told “not to spend time on this.” ↩

- The purpose of a pharma hack is to make the pharmaceutical sales sites the attackers promote rank higher in Google results than they otherwise would. ↩

- The last command only goes back so far, but grepping the ‘/var/log/secure’ logs for ‘successful’ and noting the IP addresses tells the story. ↩

- This command lists all unsuccessful login attempts. ↩

- Fail2Ban (F2B) only supported IPv4 at this time, but fortunately most IPs reaching port 22 were IPv4. ↩

- This neat Linux subsystem watches for file changes and can report accordingly ↩

- We didn’t really use git for version control at this time, just for casual backups. ↩

- I treated it as my baby until we laid out an AWS server plan some time later. ↩